
Single computer bus that connects the major components of a computer system. From Wikipedia, the free encyclopedia.

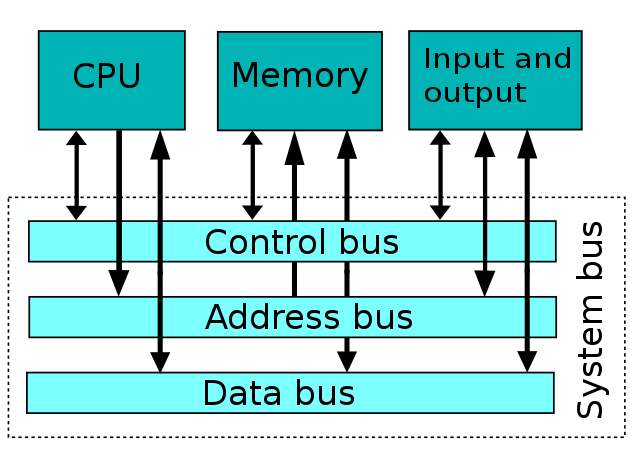

A system bus is a single computer bus that connects the major components of a computer system, combining the functions of a data bus to carry information, an address bus to determine where it should be sent or read from, and a control bus to determine its operation. The technique was developed to reduce costs and improve modularity, and although popular in the 1970s and 1980s, more modern computers use a variety of separate buses adapted to more specific needs.

The system-level bus (as distinct from a CPU's internal datapath buses) connects the CPU to memory and I/O devices.[1] Typically a system-level bus is designed for use as a backplane.[2]

Many early computers were based on the First Draft of a Report on the EDVAC, published in 1945. In what became known as the von Neumann architecture, a central control unit and arithmetic logic unit (ALU, which von Neumann called the central arithmetic part) were combined with computer memory and input and output functions to form a stored-program computer.[3] The report presented a general organization and theoretical model of the computer, but not the implementation of that model.[4] Designs soon integrated the control unit and ALU into what became known as the central processing unit (CPU).

Computers in the 1950s and 1960s were generally constructed in an ad-hoc fashion. For example, the CPU, memory, and input/output units were each one or more cabinets connected by cables. Engineers used the common techniques of standardized bundles of wires and extended the concept as backplanes were used to hold printed circuit boards in these early machines. The name "bus" was already used for "bus bars" that carried electrical power to the various parts of electric machines, including early mechanical calculators.[5] The advent of integrated circuits vastly reduced the size of each computer unit, and buses became more standardized.[6] Standard modules could be interconnected in more uniform ways and were easier to develop and maintain.

To provide even more modularity with reduced cost, memory and I/O buses (and the required control and power buses) were sometimes combined into a single unified system bus.[7] Modularity and cost became important as computers became small enough to fit in a single cabinet (and customers expected similar price reductions). Digital Equipment Corporation (DEC) further reduced cost for mass-produced minicomputers and mapped I/O devices into the memory bus, so that the devices appeared to be memory locations. This memory-mapped I/O was implemented in the Unibus of the PDP-11 around 1969, eliminating the need for a separate I/O bus.[8] Even computers such as the PDP-8 without memory-mapped I/O were soon implemented with a system bus, which allowed modules to be plugged into any slot.[9] Some authors called this a new streamlined "model" of computer architecture.[10]
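Memory-mapped I/O on a unified bus can be illustrated with a minimal Python sketch. The class names (`Ram`, `ConsoleOut`, `UnifiedBus`) and the addresses are hypothetical and chosen only for illustration; they are not the PDP-11's actual Unibus address layout.

```python
class Ram:
    """Plain read/write memory."""

    def __init__(self, size):
        self.cells = [0] * size

    def read(self, offset):
        return self.cells[offset]

    def write(self, offset, value):
        self.cells[offset] = value & 0xFF


class ConsoleOut:
    """Hypothetical output device with a single data register."""

    def __init__(self):
        self.printed = []

    def read(self, offset):
        return 0  # nothing to read back in this toy device

    def write(self, offset, value):
        self.printed.append(chr(value & 0xFF))


class UnifiedBus:
    """One address space shared by memory and I/O devices."""

    def __init__(self):
        self.regions = []  # (base address, size, device)

    def attach(self, base, size, device):
        self.regions.append((base, size, device))

    def _decode(self, address):
        # Find the device whose address range contains this address.
        for base, size, device in self.regions:
            if base <= address < base + size:
                return device, address - base
        raise ValueError(f"no device at address {address:#06x}")

    def load(self, address):
        device, offset = self._decode(address)
        return device.read(offset)

    def store(self, address, value):
        device, offset = self._decode(address)
        device.write(offset, value)


bus = UnifiedBus()
ram = Ram(0x1000)
console = ConsoleOut()
bus.attach(0x0000, 0x1000, ram)   # assumed memory range
bus.attach(0xFF00, 1, console)    # assumed device register address

bus.store(0x0010, ord("A"))           # ordinary memory write
bus.store(0xFF00, bus.load(0x0010))   # the same store operation reaches the device
```

The point of the sketch is that the processor side needs only `load` and `store`: no separate I/O instructions or I/O bus appear anywhere, because the device is just another address range.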

Many early microcomputers (with a CPU generally on a single integrated circuit) were built with a single system bus, starting with the S-100 bus in the Altair 8800 computer system in about 1975.[11] The IBM PC used the Industry Standard Architecture (ISA) bus as its system bus in 1981. The passive backplanes of early models were replaced with the standard of putting the CPU and RAM on a motherboard, with only optional daughterboards or expansion cards in system bus slots.

The Multibus became a standard of the Institute of Electrical and Electronics Engineers as IEEE standard 796 in 1983.[12] Sun Microsystems developed the SBus in 1989 to support smaller expansion cards.[13] The easiest way to implement symmetric multiprocessing was to plug more than one CPU into the shared system bus, an approach that was used through the 1980s. However, the shared bus quickly became the bottleneck and more sophisticated connection techniques were explored.[14]

Even in very simple systems, the data bus is driven at various times by the program memory, by RAM, and by I/O devices. To prevent bus contention, only one device drives the data bus at any one instant. In such systems, only the data bus is required to be bidirectional: the memory address register always drives the address bus, the control unit always drives the control bus, and an address decoder selects which particular device is allowed to drive the data bus during each bus cycle. Every instruction cycle starts with a READ memory cycle, in which program memory drives the instruction onto the data bus while the instruction register latches it. Some instructions continue with a WRITE memory cycle, in which the memory data register drives data onto the data bus and into the chosen RAM or I/O device. Other instructions continue with another READ memory cycle, in which the chosen RAM, program memory, or I/O device drives data onto the data bus while the memory data register latches it.
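The READ and WRITE bus cycles of such a simple system can be sketched in Python. This is a toy model under stated assumptions: the three-instruction set (`LOAD`, `STORE`, `HALT`), the address map, and all class and function names are hypothetical, and an instruction is stored as a Python tuple rather than as encoded bits.

```python
class DataBus:
    """Shared data lines: at most one driver per bus cycle."""

    def __init__(self):
        self.driver = None
        self.value = None

    def drive(self, who, value):
        if self.driver is not None:
            raise RuntimeError(f"bus contention: {who} and {self.driver}")
        self.driver, self.value = who, value

    def release(self):
        self.driver = self.value = None


class Device:
    """Memory or I/O device occupying addresses [base, base + size)."""

    def __init__(self, name, base, size, contents=()):
        self.name, self.base, self.size = name, base, size
        self.cells = list(contents) + [0] * (size - len(contents))

    def selected(self, address):
        return self.base <= address < self.base + self.size


def decode(devices, address):
    """Address decoder: pick the single device selected by this address."""
    for device in devices:
        if device.selected(address):
            return device
    raise ValueError(f"no device at address {address:#x}")


def read_cycle(devices, bus, address):
    """READ: the selected device drives the data bus; the caller latches it."""
    device = decode(devices, address)
    bus.drive(device.name, device.cells[address - device.base])
    latched = bus.value
    bus.release()
    return latched


def write_cycle(devices, bus, address, value):
    """WRITE: the memory data register drives the bus into the selected device."""
    device = decode(devices, address)
    bus.drive("MDR", value)
    device.cells[address - device.base] = bus.value
    bus.release()


def run(devices, bus, pc=0):
    """Run the toy program; return the final MDR value and a bus-cycle trace."""
    mdr, trace = 0, []
    while True:
        opcode, operand = read_cycle(devices, bus, pc)  # instruction fetch
        trace.append(("READ", pc))
        pc += 1
        if opcode == "LOAD":
            mdr = read_cycle(devices, bus, operand)
            trace.append(("READ", operand))
        elif opcode == "STORE":
            write_cycle(devices, bus, operand, mdr)
            trace.append(("WRITE", operand))
        else:  # "HALT"
            return mdr, trace


rom = Device("ROM", 0x00, 4, [("LOAD", 0x20), ("STORE", 0x21), ("HALT", 0)])
ram = Device("RAM", 0x20, 4, [7])
bus = DataBus()
mdr, trace = run([rom, ram], bus)
```

Every instruction in the trace begins with a READ of program memory, followed by at most one further READ or WRITE. `DataBus.drive` raises an error if a second device tries to drive the bus before the first has released it, which is the contention that the address decoder exists to prevent.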

More complex systems have a multi-master bus: not only do they have many devices that each drive the data bus, but they also have many bus masters that each drive the address bus. In bus snooping systems, the address bus as well as the data bus is required to be bidirectional, and is often implemented as a three-state bus. To prevent bus contention on the address bus, a bus arbiter selects which particular bus master is allowed to drive the address bus during each bus cycle.
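As an illustration of arbitration, the following Python sketch grants the bus to one requesting master per cycle. The round-robin policy and the master names are assumptions made for the example; the text above does not specify a policy, and real arbiters may use fixed priority or other schemes.

```python
class RoundRobinArbiter:
    """Grants the address bus to one requesting master per bus cycle."""

    def __init__(self, masters):
        self.masters = list(masters)
        # Start so that the first master in the list is checked first.
        self.last = len(self.masters) - 1

    def grant(self, requests):
        """Return the single master granted the bus this cycle, or None."""
        count = len(self.masters)
        for step in range(1, count + 1):
            index = (self.last + step) % count
            if self.masters[index] in requests:
                self.last = index  # the search restarts after the winner
                return self.masters[index]
        return None


arbiter = RoundRobinArbiter(["cpu0", "cpu1", "dma"])  # hypothetical masters
grants = [arbiter.grant({"cpu0", "dma"}) for _ in range(4)]
# grants == ["cpu0", "dma", "cpu0", "dma"]
```

Because the search restarts just after the most recent winner, two masters that request the bus continuously alternate rather than one starving the other.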

Intel has used the term Dual Independent Bus (DIB) for two different purposes. The first use came when Intel changed from a single local bus to the DIB, using the external front-side bus to reach the main system memory and I/O devices, and the internal back-side bus to reach the L2 CPU cache. This was introduced in the Pentium Pro in 1995.[15][16][17]

In 2005 and 2006 Intel introduced the 8500 and 5000 chipsets, where DIB referred to the two front-side buses (FSBs) on a chipset, which doubled the system bandwidth compared to having just one FSB shared by all the CPUs. However, the information needed to guarantee the cache coherence of shared data located in different caches has to be broadcast (snooped) to check the cache state of the CPUs on the other FSB, reducing the available bandwidth. To reduce this coherency traffic, a snoop filter was included in the higher-end chipsets so that cache state information is available on the chipset. In 2007 Intel extended the idea of multiple buses in the 7300 chipset with four independent FSBs, calling it dedicated high-speed interconnects (DHSI).[18]
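The effect of a snoop filter can be sketched in Python as a chipset-side table recording which front-side bus may hold each cache line. This is a simplified illustration, not Intel's actual design: the `SnoopFilter` class and its fields are hypothetical, and cache evictions and coherence states are ignored.

```python
class SnoopFilter:
    """Chipset-side record of which front-side bus may cache each line."""

    def __init__(self, num_buses=2):
        self.num_buses = num_buses
        self.holders = {}        # cache line address -> set of bus ids
        self.snoops_sent = 0
        self.snoops_avoided = 0

    def access(self, bus_id, line):
        """Handle a request from bus_id; return the buses that must be snooped."""
        holders = self.holders.setdefault(line, set())
        targets = sorted(holders - {bus_id})
        # Without a filter, every other bus would be snooped on every request.
        self.snoops_sent += len(targets)
        self.snoops_avoided += (self.num_buses - 1) - len(targets)
        holders.add(bus_id)
        return targets


snoop_filter = SnoopFilter(num_buses=2)
results = [
    snoop_filter.access(0, 0x40),  # first touch: no other bus holds the line
    snoop_filter.access(0, 0x80),
    snoop_filter.access(1, 0x40),  # bus 0 may hold 0x40, so it must be snooped
    snoop_filter.access(1, 0xC0),
]
# results == [[], [], [0], []]
```

In this run, broadcasting would have sent four snoops to the other bus, while the filter sends one. A real filter must also handle evictions and limited table capacity, which this sketch leaves out.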

The system bus approach is obsolete in modern personal and server computers, which instead use higher-performance interconnection technologies such as HyperTransport and Intel QuickPath Interconnect, while the system bus architecture continues to be used on simpler embedded microprocessors. The system bus can even be internal to a single integrated circuit, producing a system-on-a-chip. Examples include AMBA, CoreConnect, and Wishbone.[19]

Direct Media Interface (DMI) is an example of a system bus (besides directly accessed PCIe lanes) implemented by Intel and known since at least 2004. It is primarily used to access memory-mapped I/O devices and to connect the CPU to the chipset.
