System on a chip

Micro-electronic component From Wikipedia, the free encyclopedia

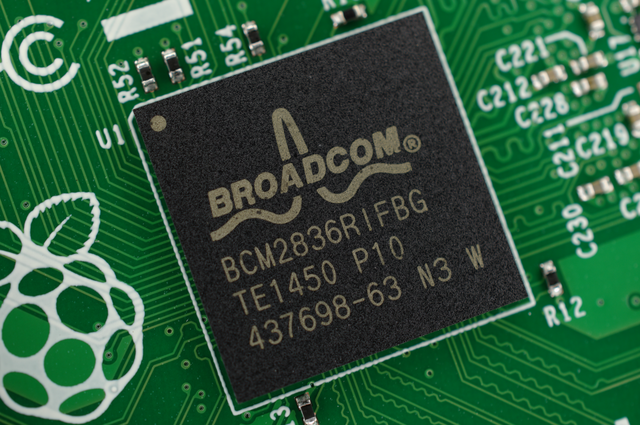

A system on a chip (SoC) is an integrated circuit that combines most or all key components of a computer or electronic system onto a single microchip.[1] Typically, an SoC includes a central processing unit (CPU) with memory, input/output, and data storage control functions, along with optional features like a graphics processing unit (GPU), Wi-Fi connectivity, and radio frequency processing. This high level of integration minimizes the need for separate, discrete components, thereby enhancing power efficiency and simplifying device design.

High-performance SoCs are often paired with dedicated memory, such as LPDDR, and flash storage chips, such as eUFS or eMMC, which may be stacked directly on top of the SoC in a package-on-package (PoP) configuration or placed nearby on the motherboard. Some SoCs also operate alongside specialized chips, such as cellular modems.[2]

Fundamentally, SoCs integrate one or more processor cores with critical peripherals. This integration is conceptually similar to that of a microcontroller, but provides far greater computational power. The unified design delivers lower power consumption and a reduced semiconductor die area compared to traditional multi-chip architectures, though at the cost of reduced modularity and component replaceability.

SoCs are ubiquitous in mobile computing, where compact, energy-efficient designs are critical. They power smartphones, tablets, and smartwatches, and are increasingly important in edge computing, where real-time data processing occurs close to the data source. By driving the trend toward tighter integration, SoCs have reshaped the design landscape for modern computing devices.[3][4]

Types

In general, there are three distinguishable types of SoCs:

- SoCs built around a microcontroller;

- SoCs built around a microprocessor, often found in mobile phones;

- Specialized application-specific integrated circuit SoCs designed for specific applications that do not fit into the above two categories.

Applications

SoCs can be applied to any computing task. However, they are typically used in mobile computing such as tablets, smartphones, smartwatches, and netbooks as well as embedded systems and in applications where previously microcontrollers would be used.

Embedded systems

Where previously only microcontrollers could be used, SoCs are rising to prominence in the embedded systems market. Tighter system integration offers better reliability and mean time between failures, and SoCs offer more advanced functionality and computing power than microcontrollers.[5] Applications include AI acceleration, embedded machine vision,[6] data collection, telemetry, vector processing and ambient intelligence. Embedded SoCs often target the internet of things, multimedia, networking, telecommunications and edge computing markets. Some examples of SoCs for embedded applications include STM32, RP2040, and AMD Zynq 7000.

Mobile computing

Mobile computing SoCs always bundle processors, memories, on-chip caches and wireless networking capabilities, and often digital camera hardware and firmware. As memory sizes increase, high-end SoCs often have no main memory or flash storage of their own; instead, the memory and flash chips are placed right next to, or above (package on package), the SoC.[7] Some examples of mobile computing SoCs include:

- Samsung Electronics: list, typically based on ARM

- Qualcomm:

  - Snapdragon (list), used in many smartphones. In 2018, Snapdragon SoCs were being used as the backbone of laptop computers running Windows 10, marketed as "Always Connected PCs".[8][9]

- MediaTek, typically based on ARM:

  - Dimensity and Kompanio series. Standalone application and tablet processors that power devices such as the Amazon Echo Show

Personal computers

In 1992, Acorn Computers produced the A3010, A3020 and A4000 range of personal computers with the ARM250 SoC. It combined the original Acorn ARM2 processor with a memory controller (MEMC), video controller (VIDC), and I/O controller (IOC). In previous Acorn ARM-powered computers, these were four discrete chips. The ARM7500 chip was their second-generation SoC, based on the ARM700, VIDC20 and IOMD controllers, and was widely licensed in embedded devices such as set-top boxes, as well as later Acorn personal computers.

Tablet and laptop manufacturers have learned lessons from embedded systems and smartphone markets about reduced power consumption, better performance and reliability from tighter integration of hardware and firmware modules, and LTE and other wireless network communications integrated on chip (integrated network interface controllers).[10]

On modern laptops and mini PCs, the low-power variants of AMD Ryzen and Intel Core processors use an SoC design that integrates the CPU, integrated GPU (iGPU), chipset and other processors in a single package. However, such x86 processors still require external memory and storage chips.

Structure

An SoC consists of hardware functional units, including microprocessors that run software code, as well as a communications subsystem to connect, control, direct and interface between these functional modules.

Functional components

Processor cores

An SoC must have at least one processor core, but typically an SoC has more than one core. Processor cores can be a microcontroller, microprocessor (μP),[11] digital signal processor (DSP) or application-specific instruction set processor (ASIP) core.[12] ASIPs have instruction sets that are customized for an application domain and designed to be more efficient than general-purpose instructions for a specific type of workload. Multiprocessor SoCs have more than one processor core by definition. The ARM architecture is a common choice for SoC processor cores because some ARM-architecture cores are soft processors specified as IP cores.[11]

Memory

SoCs must have semiconductor memory blocks to perform their computation, as do microcontrollers and other embedded systems. Depending on the application, SoC memory may form a memory hierarchy and cache hierarchy. This is common in the mobile computing market, but is not necessary in many low-power embedded microcontrollers. Memory technologies for SoCs include read-only memory (ROM), random-access memory (RAM), electrically erasable programmable ROM (EEPROM) and flash memory.[11] As in other computer systems, RAM can be subdivided into relatively faster but more expensive static RAM (SRAM) and slower but cheaper dynamic RAM (DRAM). When an SoC has a cache hierarchy, SRAM will usually be used to implement processor registers and cores' built-in caches, whereas DRAM will be used for main memory. When the SoC has multiple processors, "main memory" may be specific to a single processor (which can be multi-core); in this case it is distributed memory, and its contents must be sent via § Intermodule communication on-chip to be accessed by a different processor.[12] For further discussion of multi-processing memory issues, see cache coherence and memory latency.

Interfaces

SoCs include external interfaces, typically for communication protocols. These are often based upon industry standards such as USB, Ethernet, USART, SPI, HDMI, I²C, CSI, etc. These interfaces will differ according to the intended application. Wireless networking protocols such as Wi-Fi, Bluetooth, 6LoWPAN and near-field communication may also be supported.

When needed, SoCs include analog interfaces, including analog-to-digital and digital-to-analog converters, often for signal processing. These may be able to interface with different types of sensors or actuators, including smart transducers. They may interface with application-specific modules or shields,[nb 1] or they may be internal to the SoC, for example when an analog sensor is built into the SoC and its readings must be converted to digital signals for mathematical processing.

Digital signal processors

Digital signal processor (DSP) cores are often included on SoCs. They perform signal processing operations in SoCs for sensors, actuators, data collection, data analysis and multimedia processing. DSP cores typically feature very long instruction word (VLIW) and single instruction, multiple data (SIMD) instruction set architectures, and are therefore highly amenable to exploiting instruction-level parallelism through parallel processing and superscalar execution.[12]: 4 DSP cores most often feature application-specific instructions, and as such are typically application-specific instruction set processors (ASIP). Such application-specific instructions correspond to dedicated hardware functional units that compute those instructions.

Typical DSP instructions include multiply-accumulate, Fast Fourier transform, fused multiply-add, and convolutions.
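As a rough illustration of why multiply-accumulate is so central to DSP workloads, the following sketch builds a finite impulse response (FIR) filter, a form of convolution, out of repeated multiply-accumulate steps. The function names `mac` and `fir_filter` are hypothetical and chosen for this example only; a real DSP core performs each step in dedicated hardware rather than in software.

```python
def mac(acc, a, b):
    """One multiply-accumulate step: return acc + a * b."""
    return acc + a * b


def fir_filter(samples, taps):
    """Convolve samples with taps using one MAC per (sample, tap) pair."""
    out = []
    for n in range(len(samples)):
        acc = 0
        for k, tap in enumerate(taps):
            if n - k >= 0:  # ignore samples before the start of the input
                acc = mac(acc, tap, samples[n - k])
        out.append(acc)
    return out


# Two-tap filter that sums each sample with its predecessor.
print(fir_filter([1, 2, 3, 4], [1, 1]))  # prints [1, 3, 5, 7]
```

Each output sample costs one MAC per filter tap, which is why hardware that completes a MAC in a single instruction speeds up filtering so directly.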

Other

As with other computer systems, SoCs require timing sources to generate clock signals, control execution of SoC functions and provide time context to signal processing applications of the SoC, if needed. Popular time sources are crystal oscillators and phase-locked loops.

SoC peripherals include counter-timers, real-time timers and power-on reset generators. SoCs also include voltage regulators and power management circuits.

Intermodule communication

SoCs comprise many execution units. These units must often send data and instructions back and forth. Because of this, all but the most trivial SoCs require communications subsystems. Originally, as with other microcomputer technologies, data bus architectures were used, but recently designs based on sparse intercommunication networks known as networks-on-chip (NoC) have risen to prominence and are forecast to overtake bus architectures for SoC design in the near future.[13]

Bus-based communication

Historically, a shared global computer bus typically connected the different components, also called "blocks", of the SoC.[13] A very common bus for SoC communications is ARM's royalty-free Advanced Microcontroller Bus Architecture (AMBA) standard.

Direct memory access controllers route data directly between external interfaces and SoC memory, bypassing the CPU or control unit, thereby increasing the data throughput of the SoC. This is similar to some device drivers of peripherals on component-based multi-chip module PC architectures.

Bus-based designs scale poorly, however: wire delay does not improve with continued miniaturization, system performance does not scale with the number of cores attached, the SoC's operating frequency must decrease with each additional core attached for power to be sustainable, and long wires consume large amounts of electrical power. These challenges make buses prohibitive for manycore systems on chip.[13]: xiii

Network on a chip

In the late 2010s, a trend emerged of SoCs implementing communications subsystems with a network-like topology instead of bus-based protocols. A trend towards more processor cores on SoCs has caused on-chip communication efficiency to become one of the key factors in determining the overall system performance and cost.[13]: xiii This has led to the emergence of interconnection networks with router-based packet switching known as "networks on chip" (NoCs) to overcome the bottlenecks of bus-based networks.[13]: xiii

Networks-on-chip have advantages including destination- and application-specific routing, greater power efficiency and reduced possibility of bus contention. Network-on-chip architectures take inspiration from communication protocols like TCP and the Internet protocol suite for on-chip communication,[13] although they typically have fewer network layers. Optimal network-on-chip network architectures are an ongoing area of much research interest. NoC architectures range from traditional distributed computing network topologies such as torus, hypercube, meshes and tree networks to genetic algorithm scheduling to randomized algorithms such as random walks with branching and randomized time to live (TTL).
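To make the idea of router-based on-chip communication concrete, the sketch below routes a packet across a two-dimensional mesh using dimension-ordered (XY) routing. XY routing is a common textbook scheme chosen here purely for illustration and is not drawn from the sources cited above; the function name `xy_route` and the coordinate convention are assumptions of this example.

```python
def xy_route(src, dst):
    """Return the routers visited from src to dst on a 2D mesh.

    Routers are addressed as (x, y) tuples. The packet first travels along
    the X dimension until the column matches, then along the Y dimension.
    """
    x, y = src
    path = [(x, y)]
    while x != dst[0]:
        x += 1 if dst[0] > x else -1
        path.append((x, y))
    while y != dst[1]:
        y += 1 if dst[1] > y else -1
        path.append((x, y))
    return path


route = xy_route((0, 0), (2, 1))
hops = len(route) - 1  # number of router-to-router links traversed
```

On a mesh the hop count equals the Manhattan distance between source and destination, so only the links on the chosen path are occupied while other routers stay free to carry unrelated traffic.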

Many SoC researchers consider NoC architectures to be the future of SoC design because they have been shown to efficiently meet power and throughput needs of SoC designs. Current NoC architectures are two-dimensional. 2D IC design has limited floorplanning choices as the number of cores in SoCs increases, so as three-dimensional integrated circuits (3DICs) emerge, SoC designers are looking towards building three-dimensional on-chip networks known as 3DNoCs.[13]

Design flow

A system on a chip consists of both the hardware, described in § Structure, and the software controlling the microcontroller, microprocessor or digital signal processor cores, peripherals and interfaces. The design flow for an SoC aims to develop this hardware and software at the same time, also known as architectural co-design. The design flow must also take into account optimizations (§ Optimization goals) and constraints.

Most SoCs are developed from pre-qualified hardware component IP core specifications for the hardware elements and execution units, collectively "blocks", described above, together with software device drivers that may control their operation. Of particular importance are the protocol stacks that drive industry-standard interfaces like USB. The hardware blocks are put together using computer-aided design tools, specifically electronic design automation tools; the software modules are integrated using a software integrated development environment.

SoC components are also often designed in high-level programming languages such as C++, MATLAB or SystemC and converted to RTL designs through high-level synthesis (HLS) tools such as C to HDL or flow to HDL.[14] HLS products called "algorithmic synthesis" allow designers to use C++ to model and synthesize system, circuit, software and verification levels in a single high-level language commonly known to computer engineers, independently of the time scales that are typically specified in HDL.[15] Other components can remain software and be compiled and embedded onto soft-core processors included in the SoC as IP core modules in HDL.

Once the architecture of the SoC has been defined, any new hardware elements are written in a hardware description language at the register transfer level (RTL), an abstraction that defines the circuit behavior, or are synthesized into RTL from a high-level language through high-level synthesis. These elements are connected together in a hardware description language to create the full SoC design. The logic specified to connect these components and convert between possibly different interfaces provided by different vendors is called glue logic.

Design verification

Chips are verified for functional correctness before being sent to a semiconductor foundry. This process is called functional verification, and it accounts for a significant portion of the time and energy expended in the chip design life cycle, often quoted as 70%.[16][17] With the growing complexity of chips, hardware verification languages like SystemVerilog, SystemC, e, and OpenVera are being used. Bugs found in the verification stage are reported to the designer.

Traditionally, engineers have employed simulation acceleration, emulation or prototyping on reprogrammable hardware to verify and debug hardware and software for SoC designs prior to the finalization of the design, known as tape-out. Field-programmable gate arrays (FPGAs) are favored for prototyping SoCs because FPGA prototypes are reprogrammable, allow debugging and are more flexible than application-specific integrated circuits (ASICs).[18][19]

With high capacity and fast compilation time, simulation acceleration and emulation are powerful technologies that provide wide visibility into systems. Both technologies, however, operate slowly, on the order of MHz, which may be significantly slower – up to 100 times slower – than the SoC's operating frequency. Acceleration and emulation boxes are also very large and expensive at over US$1 million.[citation needed]

FPGA prototypes, in contrast, use FPGAs directly to enable engineers to validate and test at, or close to, a system's full operating frequency with real-world stimuli. Tools such as Certus[20] are used to insert probes in the FPGA RTL that make signals available for observation. This is used to debug hardware, firmware and software interactions across multiple FPGAs with capabilities similar to a logic analyzer.

In parallel, the hardware elements are grouped and passed through a process of logic synthesis, during which performance constraints, such as operational frequency and expected signal delays, are applied. This generates an output known as a netlist describing the design as a physical circuit and its interconnections. These netlists are combined with the glue logic connecting the components to produce the schematic description of the SoC as a circuit which can be printed onto a chip. This process is known as place and route and precedes tape-out in the event that the SoCs are produced as application-specific integrated circuits (ASIC).

Optimization goals

SoCs must optimize power use, area on die, communication, positioning for locality between modular units and other factors. Optimization is necessarily a design goal of SoCs. If optimization were not necessary, engineers would use a multi-chip module architecture without accounting for the area use, power consumption or performance of the system to the same extent.

Common optimization targets for SoC designs follow, with explanations of each. In general, optimizing any of these quantities may be a hard combinatorial optimization problem, and can indeed be NP-hard fairly easily. Therefore, sophisticated optimization algorithms are often required and it may be practical to use approximation algorithms or heuristics in some cases. Additionally, most SoC designs contain multiple variables to optimize simultaneously, so Pareto efficient solutions are sought after in SoC design. Oftentimes the goals of optimizing some of these quantities are directly at odds, further adding complexity to design optimization of SoCs and introducing trade-offs in system design.
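The notion of a Pareto efficient design can be sketched in a few lines. In this minimal example each candidate design is a tuple of metrics where lower is better, and a design is kept only if no other candidate is at least as good on every metric and strictly better on one. The metric ordering (power, area, latency) and the numbers are hypothetical and serve only to illustrate the idea.

```python
def dominates(a, b):
    """True if a is no worse than b on every metric and better on at least one."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(designs):
    """Return the designs that are not dominated by any other design."""
    return [
        d for d in designs
        if not any(dominates(other, d) for other in designs if other != d)
    ]


# Hypothetical candidates: (power in mW, area in mm^2, latency in ns).
candidates = [(100, 10, 5), (120, 8, 5), (130, 12, 6), (90, 15, 7)]
front = pareto_front(candidates)
```

Here `(130, 12, 6)` is dropped because `(100, 10, 5)` beats it on all three metrics, while the remaining three designs each win on some metric and represent genuine trade-offs. This brute-force comparison is quadratic in the number of candidates, which is acceptable for small design spaces; larger spaces call for the heuristics mentioned above.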

For broader coverage of trade-offs and requirements analysis, see requirements engineering.

Targets

Power consumption

SoCs are optimized to minimize the electrical power used to perform the SoC's functions. Most SoCs must use low power. SoC systems often require long battery life (such as smartphones), can potentially spend months or years without a power source while needing to maintain autonomous function, and are often limited in power use by a high number of embedded SoCs being networked together in an area. Additionally, energy costs can be high, and conserving energy will reduce the total cost of ownership of the SoC. Finally, waste heat from high energy consumption can damage other circuit components if too much heat is dissipated, giving another pragmatic reason to conserve energy. The amount of energy used in a circuit is the integral of power consumed with respect to time, and power is the product of current and voltage. Equivalently, by Ohm's law, power is current squared times resistance or voltage squared divided by resistance:

$$P = IV = \frac{V^2}{R} = I^2 R$$
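The relations between power, current, voltage and energy described above can be checked numerically. The following is a minimal sketch: the function names are hypothetical, and the trapezoidal rule is simply one convenient way to approximate the integral of power over time from sampled measurements.

```python
def power_watts(voltage, current):
    """Electrical power as the product of voltage and current."""
    return voltage * current


def energy_joules(power_samples, dt):
    """Approximate the integral of power over time (trapezoidal rule).

    power_samples are readings in watts taken every dt seconds.
    """
    total = 0.0
    for p0, p1 in zip(power_samples, power_samples[1:]):
        total += 0.5 * (p0 + p1) * dt
    return total


# Hypothetical operating point: 1.0 V across a 2.0 ohm load.
voltage, resistance = 1.0, 2.0
current = voltage / resistance        # Ohm's law
p_iv = power_watts(voltage, current)  # P = IV
p_i2r = current ** 2 * resistance     # P = I^2 R
p_v2r = voltage ** 2 / resistance     # P = V^2 / R
```

All three expressions give 0.5 W for this operating point, and a constant 2 W draw sampled over two seconds integrates to 4 J.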

SoCs are frequently embedded in portable devices such as smartphones, GPS navigation devices, digital watches (including smartwatches) and netbooks. Customers want long battery lives for mobile computing devices, another reason that power consumption must be minimized in SoCs. Multimedia applications are often executed on these devices, including video games, video streaming and image processing, all of which have grown in computational complexity in recent years with user demands and expectations for higher-quality multimedia. Computation is more demanding as expectations move towards 3D video at high resolution with multiple standards, so SoCs performing multimedia tasks must be computationally capable platforms while using little enough power to run off a standard mobile battery.[12]: 3

Performance per watt

SoCs are optimized to maximize power efficiency in performance per watt: maximize the performance of the SoC given a budget of power usage. Many applications such as edge computing, distributed processing and ambient intelligence require a certain level of computational performance, but power is limited in most SoC environments.
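As a small sketch of this constrained view of efficiency, the code below picks the highest-performing design that fits within a power budget and computes performance per watt for comparison. The design names, performance scores and power figures are invented for illustration, and the dictionary layout is an assumption of this example.

```python
def perf_per_watt(design):
    """Performance delivered per watt of power drawn."""
    return design["perf"] / design["power_w"]


def best_under_budget(designs, power_budget_w):
    """Return the highest-performance design within the budget, or None."""
    feasible = [d for d in designs if d["power_w"] <= power_budget_w]
    return max(feasible, key=lambda d: d["perf"], default=None)


# Hypothetical candidates with arbitrary performance units.
designs = [
    {"name": "A", "perf": 100, "power_w": 2.0},
    {"name": "B", "perf": 150, "power_w": 4.0},
    {"name": "C", "perf": 120, "power_w": 2.5},
]
choice = best_under_budget(designs, power_budget_w=3.0)
```

With a 3 W budget the selection is design C, even though design A has the best performance per watt and design B the best raw performance, which shows why the budget and the efficiency ratio have to be considered together.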

Waste heat

SoC designs are optimized to minimize waste heat output on the chip. As with other integrated circuits, heat generated due to high power density is the bottleneck to further miniaturization of components.[21]: 1 The power densities of high speed integrated circuits, particularly microprocessors and including SoCs, have become highly uneven. Too much waste heat can damage circuits and erode the reliability of the circuit over time. High temperatures and thermal stress negatively impact reliability through stress migration, electromigration, wire bonding failure, metastability and other performance degradation of the SoC over time, decreasing the mean time between failures.[21]: 2–9

In particular, most SoCs are in a small physical area or volume and therefore the effects of waste heat are compounded because there is little room for it to diffuse out of the system. Because of high transistor counts on modern devices, oftentimes a layout of sufficient throughput and high transistor density is physically realizable from fabrication processes but would result in unacceptably high amounts of heat in the circuit's volume.[21]: 1

These thermal effects force SoC and other chip designers to apply conservative design margins, creating less performant devices to mitigate the risk of catastrophic failure. Due to increased transistor densities as length scales get smaller, each process generation produces more heat output than the last. Compounding this problem, SoC architectures are usually heterogeneous, creating spatially inhomogeneous heat fluxes, which cannot be effectively mitigated by uniform passive cooling.[21]: 1

Throughput

SoCs are optimized to maximize computational and communications throughput.

Latency

SoCs are optimized to minimize latency for some or all of their functions. This can be accomplished by laying out elements with proper proximity and locality to each other to minimize the interconnection delays and maximize the speed at which data is communicated between modules, functional units and memories. In general, optimizing to minimize latency is an NP-complete problem equivalent to the Boolean satisfiability problem.

For tasks running on processor cores, latency and throughput can be improved with task scheduling. Some tasks run in application-specific hardware units, however, and even task scheduling may not be sufficient to optimize all software-based tasks to meet timing and throughput constraints.

Methodologies

Systems on chip are modeled with standard hardware verification and validation techniques, but additional techniques are used to model and optimize SoC design alternatives to make the system optimal with respect to multiple-criteria decision analysis on the above optimization targets.

Task scheduling

Task scheduling is an important activity in any computer system with multiple processes or threads sharing a single processor core. It is important to reduce § Latency and increase § Throughput for embedded software running on an SoC's § Processor cores. Not every important computing activity in an SoC is performed in software running on on-chip processors, but scheduling can drastically improve performance of software-based tasks and other tasks involving shared resources.

Software running on SoCs often schedules tasks according to network scheduling and randomized scheduling algorithms.

Pipelining

Hardware and software tasks are often pipelined in processor design. Pipelining is an important principle for speedup in computer architecture. Pipelines are frequently used in GPUs (graphics pipeline) and RISC processors (evolutions of the classic RISC pipeline), but are also applied to application-specific tasks such as digital signal processing and multimedia manipulations in the context of SoCs.[12]
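The speedup from pipelining can be estimated with a simple cycle count. The sketch below assumes an idealized pipeline in which every stage takes one cycle and there are no stalls or hazards, which real processors and signal-processing pipelines only approximate; the function names are hypothetical.

```python
def unpipelined_cycles(stages, items):
    """Cycles needed when each item passes through all stages before the next starts."""
    return stages * items


def pipelined_cycles(stages, items):
    """Cycles for an ideal pipeline: fill the pipeline once, then finish one item per cycle."""
    if items == 0:
        return 0
    return stages + items - 1


def speedup(stages, items):
    """Ratio of unpipelined to pipelined cycle counts."""
    return unpipelined_cycles(stages, items) / pipelined_cycles(stages, items)


# A hypothetical 5-stage pipeline processing 100 items.
cycles = pipelined_cycles(5, 100)
gain = speedup(5, 100)
```

For 100 items on five stages the ideal pipeline needs 104 cycles instead of 500, so the speedup approaches the number of stages as the stream of items grows, while a single item gains nothing.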

Probabilistic modeling

SoCs are often analyzed through probabilistic models, queueing networks, and Markov chains. For instance, Little's law allows SoC states and NoC buffers to be modeled as arrival processes and analyzed through Poisson random variables and Poisson processes.
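Little's law states that, in a stable system, the average number of items present equals the average arrival rate multiplied by the average time each item spends in the system. A minimal sketch of applying it to an on-chip buffer follows; the function names and the traffic figures are hypothetical.

```python
def mean_occupancy(arrival_rate, mean_time_in_system):
    """Little's law: L = lambda * W."""
    return arrival_rate * mean_time_in_system


def mean_time_in_system(occupancy, arrival_rate):
    """Little's law rearranged: W = L / lambda."""
    return occupancy / arrival_rate


# Hypothetical NoC buffer: 0.25 packets arrive per cycle on average,
# and each packet spends 8 cycles in the buffer on average.
avg_packets = mean_occupancy(0.25, 8)
```

Under these assumed figures the buffer holds two packets on average, which gives a first estimate of how deep it needs to be before burstiness is taken into account.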

Markov chains

SoCs are often modeled with Markov chains, both discrete time and continuous time variants. Markov chain modeling allows asymptotic analysis of the SoC's steady state distribution of power, heat, latency and other factors to allow design decisions to be optimized for the common case.
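As an illustration of steady-state analysis, the sketch below models a core that switches between an active and an idle state as a two-state discrete-time Markov chain and finds its long-run state distribution by repeatedly applying the transition matrix. The transition probabilities and per-state power figures are invented for this example, and power iteration is just one simple way to obtain the stationary distribution.

```python
def steady_state(transition, iterations=1000):
    """Approximate the stationary distribution of a discrete-time Markov chain.

    transition[i][j] is the probability of moving from state i to state j.
    """
    n = len(transition)
    dist = [1.0 / n] * n  # start from a uniform distribution
    for _ in range(iterations):
        dist = [
            sum(dist[i] * transition[i][j] for i in range(n))
            for j in range(n)
        ]
    return dist


# Hypothetical chain: state 0 = active, state 1 = idle.
P = [
    [0.9, 0.1],  # active stays active with probability 0.9
    [0.5, 0.5],  # idle wakes up with probability 0.5
]
pi = steady_state(P)

# Hypothetical power draw per state, in watts.
state_power = [0.8, 0.05]
average_power = sum(p * w for p, w in zip(pi, state_power))
```

For this chain the core is active five sixths of the time in the long run, so the expected power is dominated by the active state, which is the kind of common-case estimate that guides design decisions.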

Fabrication

SoC chips are typically fabricated using metal–oxide–semiconductor (MOS) technology.[22] The netlists described above are used as the basis for the physical design (place and route) flow to convert the designers' intent into the design of the SoC. Throughout this conversion process, the design is analyzed with static timing modeling, simulation and other tools to ensure that it meets the specified operational parameters such as frequency, power consumption and dissipation, functional integrity (as described in the register transfer level code) and electrical integrity.

When all known bugs have been rectified and these have been re-verified and all physical design checks are done, the physical design files describing each layer of the chip are sent to the foundry's mask shop where a full set of glass lithographic masks will be etched. These are sent to a wafer fabrication plant to create the SoC dice before packaging and testing.

SoCs can be fabricated by several technologies, including:

- Full custom ASIC

- Standard cell ASIC

- Field-programmable gate array (FPGA)

ASICs consume less power and are faster than FPGAs but cannot be reprogrammed and are expensive to manufacture. FPGA designs are more suitable for lower volume designs, but after enough units of production ASICs reduce the total cost of ownership.[23]
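The volume at which an ASIC becomes cheaper than an FPGA can be estimated with a simple linear cost model. This sketch assumes the ASIC carries a one-time non-recurring engineering (NRE) cost plus a low unit cost, while the FPGA has no NRE but a higher unit cost; the function name and all figures are hypothetical.

```python
import math


def break_even_units(asic_nre, asic_unit_cost, fpga_unit_cost):
    """Smallest production volume at which the ASIC costs no more than the FPGA.

    Returns None if the FPGA unit cost is not higher than the ASIC unit cost,
    in which case the ASIC never recovers its NRE under this model.
    """
    if fpga_unit_cost <= asic_unit_cost:
        return None
    return math.ceil(asic_nre / (fpga_unit_cost - asic_unit_cost))


# Hypothetical figures: 1,000,000 NRE, 5 per ASIC unit, 55 per FPGA unit.
units = break_even_units(1_000_000, 5, 55)
```

With these assumed figures the break-even point is 20,000 units. The model ignores yield, redesign risk and time to market, all of which shift the decision in practice.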

SoC designs consume less power and have a lower cost and higher reliability than the multi-chip systems that they replace. With fewer packages in the system, assembly costs are reduced as well.

However, like most very-large-scale integration (VLSI) designs, the total cost[clarification needed] is higher for one large chip than for the same functionality distributed over several smaller chips, because of lower yields[clarification needed] and higher non-recurring engineering costs.

When it is not feasible to construct an SoC for a particular application, an alternative is a system in package (SiP) comprising a number of chips in a single package. When produced in large volumes, an SoC is more cost-effective than an SiP because its packaging is simpler.[24] An SiP may also be preferred when waste heat would be too high in an SoC for a given purpose because the functional components are too close together; in an SiP, heat dissipates better from the different functional modules since they are physically further apart.

Examples

Some examples of systems on a chip are:

- Apple A series

- Cell processor

- Adapteva's Epiphany architecture

- Xilinx Zynq UltraScale

- Qualcomm Snapdragon

Benchmarks

SoC research and development often compares many options. Benchmarks, such as COSMIC,[25] are developed to help such evaluations.

See also

- Chiplet

- List of system on a chip suppliers

- Post-silicon validation

- ARM architecture family

- RISC-V

- Single-board computer

- System in a package

- Network on a chip

- Cypress PSoC

- Application-specific instruction set processor (ASIP)

- Platform-based design

- Lab-on-a-chip

- Organ-on-a-chip in biomedical technology

- Multi-chip module

- Parallel computing

- ARM big.LITTLE co-architecture

- Hardware acceleration

Notes

- In embedded systems, "shields" are analogous to expansion cards for PCs. They often fit over a microcontroller such as an Arduino or single-board computer such as the Raspberry Pi and function as peripherals for the device.

References
