Lucky imaging

Technique for astrophotography

Lucky imaging (also called lucky exposures) is one form of speckle imaging used for astrophotography. Speckle imaging techniques use a high-speed camera with exposure times short enough (100 ms or less) so that the changes in the Earth's atmosphere during the exposure are minimal.

With lucky imaging, the exposures least affected by the atmosphere (typically around 10%) are selected and combined into a single image by shifting and adding them, yielding much higher angular resolution than would be possible with a single, longer exposure that includes all the frames.

Explanation

Images taken with ground-based telescopes are subject to the blurring effect of atmospheric turbulence (seen to the eye as the stars twinkling). Many astronomical imaging programs require higher resolution than is possible without some correction of the images. Lucky imaging is one of several methods used to remove atmospheric blurring. Used at a 1% selection or less, lucky imaging can reach the diffraction limit of even 2.5 m aperture telescopes, a resolution improvement factor of at least five over standard imaging systems.

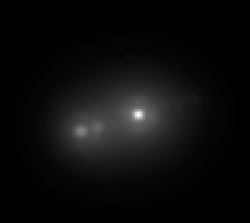

- Zeta Bootis imaged with the Nordic Optical Telescope on 13 May 2000 using the lucky imaging method. (The Airy discs around the stars are diffraction from the 2.56 m telescope aperture.)

- Typical short-exposure image of this binary star from the same dataset, but without using any speckle processing. The effect of the Earth's atmosphere is to break the image of each star up into speckles.
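
For a sense of scale, the diffraction limit mentioned above can be estimated with the Rayleigh criterion, 1.22 λ/D. The sketch below is only illustrative: the observing wavelength is not stated in the text, so the 800 nm value and the function name `diffraction_limit_arcsec` are assumptions.

```python
import math

ARCSEC_PER_RADIAN = 180.0 * 3600.0 / math.pi  # about 206265


def diffraction_limit_arcsec(wavelength_m: float, aperture_m: float) -> float:
    """Rayleigh criterion 1.22 * lambda / D, converted from radians to arcseconds."""
    return 1.22 * wavelength_m / aperture_m * ARCSEC_PER_RADIAN


# Assumed wavelength (not given in the text): 800 nm.
# Aperture: the 2.56 m Nordic Optical Telescope from the caption above.
limit = diffraction_limit_arcsec(800e-9, 2.56)
print(f"diffraction limit: {limit:.3f} arcsec")
```

Under these assumptions the limit comes out a little below 0.1 arcsec, several times finer than typical seeing of the order of an arcsecond.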

Demonstration of the principle

The sequence of images below shows how lucky imaging works.[1] From a series of 50,000 images taken at a rate of almost 40 images per second, five different long-exposure images have been created. In addition, a single exposure with very low image quality and another with very high image quality are shown at the beginning of the sequence. The astronomical target has the 2MASS ID J03323578+2843554. North is up and east is to the left.

- Single exposure with low image quality, not selected for lucky imaging.

- Single exposure with very high image quality, selected for lucky imaging.

- Average of all 50,000 images, which is almost the same as a seeing-limited long exposure of 21 minutes (50,000/40 seconds). It looks like a typical star image, slightly elongated. The full width at half maximum (FWHM) of the seeing disk is around 0.9 arcsec.

- Average of all 50,000 single images, with the center of gravity (centroid) of each image shifted to the same reference position. This is the tip-tilt-corrected, or image-stabilized, long-exposure image. It already shows more detail (two objects) than the seeing-limited image.

- Average of the 25,000 best images (50% selection), after the brightest pixel in each image was moved to the same reference position. In this image, three objects can almost be distinguished.

- Average of the 5,000 best images (10% selection), after the brightest pixel in each image was moved to the same reference position. The surrounding seeing halo is further reduced, and an Airy ring around the brightest object becomes clearly visible.

- Average of the 500 best images (1% selection), after the brightest pixel in each image was moved to the same reference position. The seeing halo is further reduced. The signal-to-noise ratio of the brightest object is the highest in this image.
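
The selection and registration steps described for these images can be sketched in a few lines of NumPy. This is a minimal illustration, not the pipeline used to produce the images: the function name `lucky_stack`, the use of the peak pixel value as the quality measure, and whole-pixel shifts that wrap around the frame edges are all assumptions made for the example.

```python
import numpy as np


def lucky_stack(frames: np.ndarray, keep_fraction: float = 0.1) -> np.ndarray:
    """Shift-and-add the sharpest fraction of a (n_frames, height, width) stack."""
    n_frames, height, width = frames.shape

    # Quality proxy (assumption): the brightest pixel value in each frame.
    peaks = frames.reshape(n_frames, -1).max(axis=1)
    n_keep = max(1, int(round(keep_fraction * n_frames)))
    best = np.argsort(peaks)[::-1][:n_keep]  # indices of the sharpest frames

    ref_y, ref_x = height // 2, width // 2  # common reference position
    total = np.zeros((height, width), dtype=np.float64)
    for i in best:
        # Locate the brightest pixel and move it to the reference position.
        y, x = np.unravel_index(np.argmax(frames[i]), (height, width))
        # np.roll wraps around the edges, which is tolerable for a sketch
        # in which the target sits well inside the frame.
        total += np.roll(frames[i], (ref_y - y, ref_x - x), axis=(0, 1))
    return total / n_keep
```

Calling `lucky_stack(frames, keep_fraction=0.01)` would correspond to the 1% selection. Setting `keep_fraction=1.0` and registering on the flux-weighted centroid instead of the brightest pixel would correspond to the tip-tilt-corrected image.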

The difference between the seeing-limited image (third image from the top) and the result of selecting the best 1% of images is quite remarkable: a triple system has been detected. The brightest component, in the west, is a V=14.9 magnitude M4V star. This component is the lucky imaging reference source. The fainter component consists of two stars of spectral classes M4.5 and M5.5.[2] The distance of the system is about 45 parsecs (pc). Airy rings can be seen, which indicates that the diffraction limit of the Calar Alto Observatory's 2.2 m telescope was reached. The signal-to-noise ratio of the point sources increases with stronger selection, while the seeing halo is increasingly suppressed. The separation between the two brightest objects is around 0.53 arcsec, and that between the two faintest objects is less than 0.16 arcsec. At a distance of 45 pc, the latter corresponds to 7.2 times the distance between Earth and Sun, around 1 billion kilometers (10⁹ km).
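
The conversion from angular to physical separation quoted above follows from the definition of the parsec: an angle of 1 arcsec at a distance of 1 pc subtends 1 astronomical unit (AU). A short check, in which the function name is a hypothetical helper and the small-angle approximation is assumed:

```python
AU_IN_KM = 1.495978707e8  # length of the astronomical unit in kilometers


def projected_separation_au(separation_arcsec: float, distance_pc: float) -> float:
    """Small-angle approximation: arcseconds times parsecs gives AU."""
    return separation_arcsec * distance_pc


sep_au = projected_separation_au(0.16, 45.0)
sep_km = sep_au * AU_IN_KM
print(f"{sep_au:.1f} AU = {sep_km:.2e} km")  # prints "7.2 AU = 1.08e+09 km"
```

This is the projected separation on the sky; the true separation between the stars can be larger.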

History

Lucky imaging methods were first used in the mid-20th century, and became popular for imaging planets in the 1950s and 1960s (using cine cameras, often with image intensifiers). It took about 30 years for the separate imaging technologies to be perfected before this counter-intuitive technique became practical. The first numerical calculation of the probability of obtaining lucky exposures was published by David L. Fried in 1978.[3]

In early applications of lucky imaging, it was generally assumed that the atmosphere smeared out or blurred the astronomical images.[4] In that work, the full width at half maximum (FWHM) of the blurring was estimated and used to select exposures. Later studies[5][6] took advantage of the fact that the atmosphere does not simply blur astronomical images, but generally produces multiple sharp copies of the image (the point spread function has speckles). New methods exploiting this produced much higher quality images than had been obtained when the image was assumed to be smeared.

In the early years of the 21st century, it was realised that turbulent intermittency (and the fluctuations in astronomical seeing conditions it produced)[7] could substantially increase the probability of obtaining a "lucky exposure" for given average astronomical seeing conditions.[8][9]
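
Fried's 1978 result is commonly quoted as P ≈ 5.6 exp[−0.1557 (D/r0)²] for D/r0 ≥ 3.5, where D is the telescope aperture and r0 is the atmospheric coherence length (the Fried parameter). The formula and its validity range are not given in the text above and are stated here from the commonly cited form of the result, so the sketch below should be read as an illustration under that assumption. The function name is hypothetical.

```python
import math


def fried_lucky_probability(aperture_m: float, r0_m: float) -> float:
    """Commonly quoted Fried (1978) approximation for the lucky-exposure probability."""
    ratio = aperture_m / r0_m
    if ratio < 3.5:
        # The approximation is only quoted for D/r0 >= 3.5.
        raise ValueError("approximation is only quoted for D/r0 >= 3.5")
    return 5.6 * math.exp(-0.1557 * ratio ** 2)


# The probability falls steeply as the aperture grows relative to r0.
for ratio in (4, 5, 7):
    p = fried_lucky_probability(ratio, 1.0)
    print(f"D/r0 = {ratio}: P ~ {p:.3g}")
```

The expression assumes a fixed r0, that is, average seeing conditions. The intermittency described above means that the fraction of usable exposures in practice can be higher.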

Lucky imaging and adaptive optics hybrid systems

In 2007, astronomers at Caltech and the University of Cambridge announced the first results from a new hybrid lucky imaging and adaptive optics (AO) system. The new camera gave the first diffraction-limited resolutions on 5 m-class telescopes in visible light. The research was performed on the 200-inch-aperture Hale Telescope at Mt. Palomar. With the lucky camera and adaptive optics, the telescope came close to its theoretical angular resolution, achieving up to 0.025 arcseconds for certain types of viewing.[10] Compared to space telescopes like the 2.4 m Hubble, the system still has some drawbacks, including a narrow field of view for crisp images (typically 10" to 20"), airglow, and electromagnetic frequencies blocked by the atmosphere.

When combined with an AO system, lucky imaging selects the periods when the turbulence the adaptive optics system must correct is reduced. In these periods, lasting a small fraction of a second, the correction given by the AO system is sufficient to give excellent resolution with visible light. The lucky imaging system averages the images taken during the excellent periods to produce a final image with much higher resolution than is possible with a conventional long-exposure AO camera.

This technique can deliver very high resolution images only of relatively small astronomical objects, up to 10 arcseconds in diameter, because it is limited by the precision of the atmospheric turbulence correction. It also requires a relatively bright 14th-magnitude star in the field of view on which to guide. Being above the atmosphere, the Hubble Space Telescope is not limited by these concerns and so is capable of much wider-field high-resolution imaging.

Popularity of technique

Both amateur and professional astronomers have begun to use this technique. Modern webcams and camcorders have the ability to capture rapid short exposures with sufficient sensitivity for astrophotography, and these devices are used with a telescope and the shift-and-add method from speckle imaging (also known as image stacking) to achieve previously unattainable resolution. If some of the images are discarded, then this type of video astronomy is called lucky imaging.

Many methods exist for image selection, including the Strehl-selection method first suggested[11] by John E. Baldwin from the Cambridge group[12] and the image contrast selection used in the Selective Image Reconstruction method of Ron Dantowitz.[13]
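
To illustrate what such selection criteria might look like, the sketch below ranks frames by two simple quality scores. These are stand-ins chosen for the example and are not the published Strehl-selection or Selective Image Reconstruction algorithms: `peak_fraction` is only a rough Strehl-like proxy (a true Strehl ratio compares the peak with that of a diffraction-limited image), `rms_contrast` is one of many possible contrast measures, and both assume background-subtracted frames.

```python
import numpy as np


def peak_fraction(frame: np.ndarray) -> float:
    """Strehl-like proxy: share of the total flux contained in the brightest pixel."""
    total = frame.sum()
    return float(frame.max() / total) if total > 0 else 0.0


def rms_contrast(frame: np.ndarray) -> float:
    """Contrast proxy: standard deviation of the pixels divided by their mean."""
    mean = frame.mean()
    return float(frame.std() / mean) if mean > 0 else 0.0


def rank_frames(frames: np.ndarray, metric) -> np.ndarray:
    """Return frame indices ordered from best to worst under the given metric."""
    scores = np.array([metric(frame) for frame in frames])
    return np.argsort(scores)[::-1]
```

A peak-based score suits point sources such as stars, whereas a contrast-based score is more natural for extended targets such as planetary surfaces, where no single bright peak exists.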

The development and availability of electron-multiplying CCDs (EMCCD, also known as LLLCCD, L3CCD, or low-light-level CCD) has allowed the first high-quality lucky imaging of faint objects.

On October 27, 2014, Google introduced a similar technique called HDR+. HDR+ takes a burst of shots with short exposures, selectively aligns the sharpest shots and averages them using computational photography techniques. Short exposures avoid blurry images or blown-out highlights, and averaging multiple shots reduces noise.[14] HDR+ is processed on hardware accelerators including the Qualcomm Hexagon DSPs and Pixel Visual Core.[15]

Alternative methods

Other approaches that can yield resolving power exceeding the limits of atmospheric seeing include adaptive optics, interferometry, other forms of speckle imaging and space-based telescopes such as NASA's Hubble Space Telescope.

References

