Dynamic time warping

Algorithm for measuring similarity between temporal sequences

From Wikipedia, the free encyclopedia

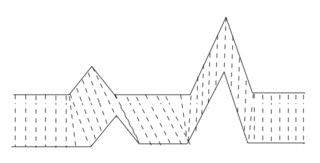

In time series analysis, dynamic time warping (DTW) is an algorithm for measuring similarity between two temporal sequences, which may vary in speed. For instance, similarities in walking could be detected using DTW, even if one person was walking faster than the other, or if there were accelerations and decelerations during the course of an observation. DTW has been applied to temporal sequences of video, audio, and graphics data — indeed, any data that can be turned into a one-dimensional sequence can be analyzed with DTW. A well-known application has been automatic speech recognition, to cope with different speaking speeds. Other applications include speaker recognition and online signature recognition. It can also be used in partial shape matching applications.

In general, DTW is a method that calculates an optimal match between two given sequences (e.g. time series) subject to certain restrictions and rules:

- Every index from the first sequence must be matched with one or more indices from the other sequence, and vice versa

- The first index from the first sequence must be matched with the first index from the other sequence (but it does not have to be its only match)

- The last index from the first sequence must be matched with the last index from the other sequence (but it does not have to be its only match)

- The mapping of the indices from the first sequence to indices from the other sequence must be monotonically increasing, and vice versa, i.e. if $j > i$ are indices from the first sequence, then there must not be two indices $l > k$ in the other sequence, such that index $i$ is matched with index $l$ and index $j$ is matched with index $k$, and vice versa

We can plot each match between the sequences $1:M$ and $1:N$ as a path in an $M \times N$ matrix from $(1, 1)$ to $(M, N)$, such that each step is one of $(0, 1)$, $(1, 0)$, $(1, 1)$. In this formulation, we see that the number of possible matches is the Delannoy number $D(M-1, N-1)$.
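As a quick check on the counting claim, the sketch below counts the admissible paths by direct recursion over the three allowed steps. The function name `count_warping_paths` is an illustrative assumption and not part of any library; it takes the two sequence lengths and counts paths from the first cell to the last.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def count_warping_paths(m: int, n: int) -> int:
    """Count paths from (1, 1) to (m, n) using steps (0, 1), (1, 0), (1, 1)."""
    if m == 1 or n == 1:
        # Along an edge of the matrix only one straight path exists.
        return 1
    return (
        count_warping_paths(m - 1, n)        # last step was (1, 0)
        + count_warping_paths(m, n - 1)      # last step was (0, 1)
        + count_warping_paths(m - 1, n - 1)  # last step was (1, 1)
    )


if __name__ == "__main__":
    for size in (2, 3, 4):
        print(size, count_warping_paths(size, size))
```

The count grows quickly with the sequence lengths, which is why the optimal match is found by dynamic programming rather than by enumerating paths.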

The optimal match is the match that satisfies all the restrictions and rules and has the minimal cost, where the cost is computed as the sum of absolute differences between the values of each matched pair of indices.
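To make the rules and the cost concrete, here is a minimal sketch that validates a candidate match and computes its cost. The helper name `match_cost` and the representation of a match as a list of 0-based `(i, j)` pairs are assumptions made for illustration. Checking that consecutive pairs differ by one of the three allowed steps is enough to guarantee that every index is matched and that the mapping is monotone.

```python
def match_cost(s, t, pairs):
    """Validate a match (non-empty list of 0-based (i, j) pairs) and return its cost."""
    n, m = len(s), len(t)
    # Boundary rules: first matches first, last matches last.
    if pairs[0] != (0, 0) or pairs[-1] != (n - 1, m - 1):
        raise ValueError("match must start at the first indices and end at the last")
    # Step rule: covers "every index is matched" and monotonicity.
    allowed_steps = {(0, 1), (1, 0), (1, 1)}
    for (i0, j0), (i1, j1) in zip(pairs, pairs[1:]):
        if (i1 - i0, j1 - j0) not in allowed_steps:
            raise ValueError(f"illegal step from {(i0, j0)} to {(i1, j1)}")
    # Cost: sum of absolute differences over all matched pairs.
    return sum(abs(s[i] - t[j]) for i, j in pairs)


if __name__ == "__main__":
    s = [1, 2, 3]
    t = [1, 2, 2, 3]
    print(match_cost(s, t, [(0, 0), (1, 1), (1, 2), (2, 3)]))
```

In the usage example the second element of `s` is matched with two elements of `t`, which is how DTW absorbs the difference in length.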

The sequences are "warped" non-linearly in the time dimension to determine a measure of their similarity independent of certain non-linear variations in the time dimension. This sequence alignment method is often used in time series classification. Although DTW measures a distance-like quantity between two given sequences, it does not guarantee that the triangle inequality holds, so it is not a metric.
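The failure of the triangle inequality can be seen on a small example. The sketch below uses a plain dynamic-programming DTW with absolute differences as the local cost; the function name `dtw` and the three sequences are illustrative choices, not taken from the cited literature.

```python
import math


def dtw(s, t):
    """DTW cost between two numeric sequences, using |x - y| as local cost."""
    n, m = len(s), len(t)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(s[i - 1] - t[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


if __name__ == "__main__":
    a, b, c = [0], [0, 1], [1, 1, 1]
    # A metric would require dtw(a, c) <= dtw(a, b) + dtw(b, c).
    print(dtw(a, c), dtw(a, b) + dtw(b, c))
```

Here going through `b` is cheaper than the direct comparison of `a` and `c`, because the single element of `a` has to be matched against every element of `c`.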

In addition to a similarity measure between the two sequences, DTW produces a so-called "warping path". By warping according to this path, the two signals may be aligned in time: the signal with an original set of points X(original), Y(original) is transformed to X(warped), Y(warped). This finds applications in genetic sequence alignment and audio synchronisation. In a related technique, sequences of varying speed may be averaged; see the average sequence section.
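The warping path can be recovered by backtracking through the accumulated-cost matrix. The following is a minimal sketch under the assumption of absolute-difference local cost; the name `dtw_with_path` is hypothetical, and ties between equally good predecessors are broken arbitrarily (here in favour of the diagonal step).

```python
import math


def dtw_with_path(s, t):
    """Return (cost, path); path is a list of 0-based (i, j) index pairs."""
    n, m = len(s), len(t)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(s[i - 1] - t[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    # Walk back from the last cell, always moving to a cheapest predecessor.
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min(
            (D[i - 1][j - 1], i - 1, j - 1),
            (D[i - 1][j], i - 1, j),
            (D[i][j - 1], i, j - 1),
        )
    path.reverse()
    return D[n][m], path


if __name__ == "__main__":
    s, t = [1, 2, 3], [1, 2, 2, 3]
    cost, path = dtw_with_path(s, t)
    # Reading both sequences along the path yields two aligned signals of equal length.
    s_warped = [s[i] for i, _ in path]
    t_warped = [t[j] for _, j in path]
    print(cost, path, s_warped, t_warped)
```

Both warped signals have the length of the path, so they can be compared or plotted sample by sample.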

This is conceptually very similar to the Needleman–Wunsch algorithm.

Implementation

This example illustrates the implementation of the dynamic time warping algorithm when the two sequences s and t are strings of discrete symbols. For two symbols x and y, $d(x, y)$ is a distance between the symbols, e.g., $d(x, y) = |x - y|$.

    int DTWDistance(s: array [1..n], t: array [1..m]) {
        DTW := array [0..n, 0..m]
        for i := 0 to n
            for j := 0 to m
                DTW[i, j] := infinity
        DTW[0, 0] := 0
        for i := 1 to n
            for j := 1 to m
                cost := d(s[i], t[j])
                DTW[i, j] := cost + minimum(DTW[i-1, j  ],    // insertion
                                            DTW[i  , j-1],    // deletion
                                            DTW[i-1, j-1])    // match
        return DTW[n, m]
    }

where DTW[i, j] is the distance between s[1:i] and t[1:j] with the best alignment.
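The pseudocode above translates almost line by line into Python. The sketch below is one possible rendering, not a reference implementation: the function name `dtw_distance` and the optional `d` parameter are assumptions, and because Python lists are 0-based, `s[i - 1]` plays the role of `s[i]` in the pseudocode.

```python
import math


def dtw_distance(s, t, d=lambda x, y: abs(x - y)):
    """DTW distance between sequences s and t under the symbol distance d."""
    n, m = len(s), len(t)
    # dtw[i][j] holds the cost of the best alignment of s[:i] with t[:j].
    dtw = [[math.inf] * (m + 1) for _ in range(n + 1)]
    dtw[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = d(s[i - 1], t[j - 1])
            dtw[i][j] = cost + min(
                dtw[i - 1][j],      # insertion
                dtw[i][j - 1],      # deletion
                dtw[i - 1][j - 1],  # match
            )
    return dtw[n][m]


if __name__ == "__main__":
    print(dtw_distance([1, 2, 3], [1, 2, 2, 3]))
    print(dtw_distance([1, 3, 4], [1, 2, 4]))
```

Passing a different `d` (for example a squared difference, or a 0/1 mismatch cost for symbols) changes the local cost without touching the recursion.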

We sometimes want to add a locality constraint. That is, we require that if s[i] is matched with t[j], then $|i - j|$ is no larger than w, a window parameter.

We can easily modify the above algorithm to add a locality constraint by restricting the inner loop to the window around the diagonal. However, this modification works only if $|n - m|$ is no larger than w, i.e. the end point is within the window length from the diagonal. In order to make the algorithm work, the window parameter w must be adapted so that $|n - m| \le w$ (see the line marked with (*) in the code).

    int DTWDistance(s: array [1..n], t: array [1..m], w: int) {
        DTW := array [0..n, 0..m]
        w := max(w, abs(n-m)) // adapt window size (*)
        for i := 0 to n
            for j := 0 to m
                DTW[i, j] := infinity
        DTW[0, 0] := 0
        for i := 1 to n
            for j := max(1, i-w) to min(m, i+w)
                DTW[i, j] := 0
        for i := 1 to n
            for j := max(1, i-w) to min(m, i+w)
                cost := d(s[i], t[j])
                DTW[i, j] := cost + minimum(DTW[i-1, j  ],    // insertion
                                            DTW[i  , j-1],    // deletion
                                            DTW[i-1, j-1])    // match
        return DTW[n, m]
    }
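A Python rendering of the windowed variant might look as follows. This is a sketch under the same assumptions as the pseudocode (absolute-difference cost by default, window widened to at least the length difference); the name `dtw_distance_windowed` is hypothetical. The intermediate loop that zeroes the cells inside the window is omitted here, since every such cell is overwritten before it is read.

```python
import math


def dtw_distance_windowed(s, t, w, d=lambda x, y: abs(x - y)):
    """DTW distance where s[i] may only be matched with t[j] if |i - j| <= w."""
    n, m = len(s), len(t)
    w = max(w, abs(n - m))  # adapt window size so that the end point is reachable
    dtw = [[math.inf] * (m + 1) for _ in range(n + 1)]
    dtw[0][0] = 0.0
    for i in range(1, n + 1):
        # Only cells within the band around the diagonal are filled in;
        # everything outside stays at infinity and can never be chosen.
        for j in range(max(1, i - w), min(m, i + w) + 1):
            cost = d(s[i - 1], t[j - 1])
            dtw[i][j] = cost + min(dtw[i - 1][j], dtw[i][j - 1], dtw[i - 1][j - 1])
    return dtw[n][m]


if __name__ == "__main__":
    s, t = [1, 0, 0, 0], [1, 1, 1, 0]
    for w in range(4):
        print(w, dtw_distance_windowed(s, t, w))
```

In the usage example the distance shrinks as the window grows, and with `w = 0` on equal-length inputs the result reduces to the sum of element-wise differences.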

Warping properties

The DTW algorithm produces a discrete matching between existing elements of one series and those of another. In other words, it does not allow time-scaling of segments within the sequence. Other methods allow continuous warping. For example, Correlation Optimized Warping (COW) divides the sequence into uniform segments that are scaled in time using linear interpolation, to produce the best matching warping. Scaling a segment down or up in time can create new elements, and thus produces a more sensitive warping than DTW's discrete matching of raw elements.

Complexity

The time complexity of the DTW algorithm is $O(NM)$, where $N$ and $M$ are the lengths of the two input sequences. The 50-year-old quadratic time bound was broken in 2016: an algorithm due to Gold and Sharir enables computing DTW in $O(N^2 / \log \log N)$ time and space for two input sequences of length $N$.[2] This algorithm can also be adapted to sequences of different lengths. Despite this improvement, it was shown that a strongly subquadratic running time of the form $O(N^{2-\epsilon})$ for some $\epsilon > 0$ cannot exist unless the strong exponential time hypothesis fails.[3][4]

While the dynamic programming algorithm for DTW requires $O(NM)$ space in a naive implementation, the space consumption can be reduced to $O(\min(N, M))$ using Hirschberg's algorithm.
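If only the distance is needed, the space saving can be obtained more simply by keeping two rows of the table at a time. The sketch below shows that simpler rolling-row idea, not Hirschberg's algorithm itself, whose divide-and-conquer step is what additionally recovers the warping path in linear space. The name `dtw_distance_linear_space` is hypothetical, and the swap of the arguments assumes a symmetric local cost such as the absolute difference.

```python
import math


def dtw_distance_linear_space(s, t):
    """DTW distance with |x - y| cost using O(min(len(s), len(t))) extra space."""
    if len(t) > len(s):
        s, t = t, s  # keep the shorter sequence along the stored row
    m = len(t)
    # prev is row i-1 of the DTW table; curr is row i.
    prev = [0.0] + [math.inf] * m
    for x in s:
        curr = [math.inf] * (m + 1)
        for j in range(1, m + 1):
            cost = abs(x - t[j - 1])
            curr[j] = cost + min(prev[j], curr[j - 1], prev[j - 1])
        prev = curr
    return prev[m]


if __name__ == "__main__":
    print(dtw_distance_linear_space([1, 2, 3], [1, 2, 2, 3]))
    print(dtw_distance_linear_space([1, 0, 0, 0], [1, 1, 1, 0]))
```

The running time is still proportional to the product of the two lengths; only the memory footprint changes.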

Fast computation

Fast techniques for computing DTW include PrunedDTW,[5] SparseDTW,[6] FastDTW,[7] and the MultiscaleDTW.[8][9]

A common task, retrieval of similar time series, can be accelerated by using lower bounds such as LB_Keogh,[10] LB_Improved,[11] or LB_Petitjean.[12] However, the Early Abandon and Pruned DTW algorithm reduces the degree of acceleration that lower bounding provides and sometimes renders it ineffective.

In a survey, Wang et al. reported slightly better results with the LB_Improved lower bound than the LB_Keogh bound, and found that other techniques were inefficient.[13] Subsequent to this survey, the LB_Enhanced bound was developed that is always tighter than LB_Keogh while also being more efficient to compute.[14] LB_Petitjean is the tightest known lower bound that can be computed in linear time.[12]
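To illustrate how such a lower bound works, here is a sketch in the style of LB_Keogh. It is adapted to the absolute-difference cost used in this article (the bound is usually stated with squared differences), assumes equal-length sequences, and uses the hypothetical name `lb_keogh`. The idea is to build an upper and lower envelope around the candidate within the warping window and to sum how far the query falls outside that envelope.

```python
def lb_keogh(query, candidate, w):
    """Lower bound on window-w DTW (|x - y| cost) for equal-length sequences."""
    if len(query) != len(candidate):
        raise ValueError("this sketch assumes sequences of equal length")
    n = len(candidate)
    total = 0.0
    for i, q in enumerate(query):
        # Envelope of the candidate over the indices reachable from i.
        window = candidate[max(0, i - w):min(n, i + w + 1)]
        upper, lower = max(window), min(window)
        if q > upper:
            total += q - upper
        elif q < lower:
            total += lower - q
        # Inside the envelope the point contributes nothing to the bound.
    return total


if __name__ == "__main__":
    query, candidate = [1, 0, 0, 0], [1, 1, 1, 0]
    for w in range(3):
        print(w, lb_keogh(query, candidate, w))
```

In a nearest-neighbour search, a candidate whose bound already exceeds the best distance found so far can be discarded without running the full quadratic DTW computation. The bound is computed in one pass over the query, although this simple version recomputes the envelope for every index.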

Average sequence

Averaging for dynamic time warping is the problem of finding an average sequence for a set of sequences. NLAAF[15] is an exact method to average two sequences using DTW. For more than two sequences, the problem is related to that of multiple alignment and requires heuristics. DBA[16] is currently a reference method to average a set of sequences consistently with DTW. COMASA[17] efficiently randomizes the search for the average sequence, using DBA as a local optimization process.
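The core update step of a barycenter-style averaging scheme such as DBA can be sketched as follows: each sequence is aligned to the current average with DTW, and each element of the average is replaced by the mean of all values matched to it. This is a simplified illustration, not the published algorithm in full; the names `dtw_path` and `dba_update`, the squared-difference local cost, and the choice of initial average are assumptions of the sketch.

```python
import math


def dtw_path(a, b):
    """Optimal warping path between a and b as 0-based (i, j) pairs."""
    n, m = len(a), len(b)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = (a[i - 1] - b[j - 1]) ** 2  # squared local cost (assumption)
            D[i][j] = cost + min(D[i - 1][j - 1], D[i - 1][j], D[i][j - 1])
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min(
            (D[i - 1][j - 1], i - 1, j - 1),
            (D[i - 1][j], i - 1, j),
            (D[i][j - 1], i, j - 1),
        )
    return path[::-1]


def dba_update(average, sequences):
    """One averaging iteration: realign every sequence, then re-average."""
    buckets = [[] for _ in average]
    for seq in sequences:
        for i, j in dtw_path(average, seq):
            buckets[i].append(seq[j])
    # Every index of the average is matched at least once per sequence,
    # so no bucket is empty as long as `sequences` is non-empty.
    return [sum(values) / len(values) for values in buckets]


if __name__ == "__main__":
    sequences = [[1, 2, 2, 3], [1, 2, 3]]
    print(dba_update([1, 2, 3], sequences))
```

In practice the update is repeated, starting from some initial sequence, until the average stops changing or an iteration budget is exhausted.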

Supervised learning

A nearest-neighbour classifier can achieve state-of-the-art performance when using dynamic time warping as a distance measure.[18]
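A one-nearest-neighbour classifier with DTW as the distance can be sketched in a few lines. The function names `dtw` and `nearest_neighbour_label`, the toy training set, and its labels are all made up for illustration; the local cost is the absolute difference.

```python
import math


def dtw(s, t):
    """DTW cost between two numeric sequences, using |x - y| as local cost."""
    n, m = len(s), len(t)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(s[i - 1] - t[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


def nearest_neighbour_label(query, train):
    """Return the label of the training sequence closest to query under DTW.

    `train` is a list of (sequence, label) pairs.
    """
    _, label = min(train, key=lambda item: dtw(query, item[0]))
    return label


if __name__ == "__main__":
    train = [
        ([0, 0, 1, 1, 0, 0], "bump"),
        ([0, 1, 2, 3, 4, 5], "ramp"),
    ]
    print(nearest_neighbour_label([0, 1, 1, 0], train))
    print(nearest_neighbour_label([0, 2, 4, 5], train))
```

The queries have a different length from the training sequences, which a point-by-point distance could not handle directly but DTW can.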

Amerced Dynamic Time Warping

Amerced Dynamic Time Warping (ADTW) is a variant of DTW designed to better control DTW's permissiveness in the alignments that it allows.[19] The windows that classical DTW uses to constrain alignments introduce a step function. Any warping of the path is allowed within the window and none beyond it. In contrast, ADTW employs an additive penalty that is incurred each time that the path is warped. Any amount of warping is allowed, but each warping action incurs a direct penalty. ADTW significantly outperforms DTW with windowing when applied as a nearest neighbor classifier on a set of benchmark time series classification tasks.[19]
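The additive penalty can be sketched as a small change to the standard recursion: the penalty is added whenever the path takes a non-diagonal (warping) step. The code below is an illustration under that reading of the description, with absolute-difference local cost; the name `adtw_distance` and the parameter name `penalty` are assumptions.

```python
import math


def adtw_distance(s, t, penalty):
    """DTW-style distance where each non-diagonal step costs an extra `penalty`."""
    n, m = len(s), len(t)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(s[i - 1] - t[j - 1])
            D[i][j] = cost + min(
                D[i - 1][j - 1],        # diagonal step: no penalty
                D[i - 1][j] + penalty,  # warping step: penalised
                D[i][j - 1] + penalty,  # warping step: penalised
            )
    return D[n][m]


if __name__ == "__main__":
    s, t = [1, 0, 0, 0], [1, 1, 1, 0]
    for penalty in (0, 0.25, 100):
        print(penalty, adtw_distance(s, t, penalty))
```

With a penalty of zero the recursion is ordinary DTW, and with a very large penalty on equal-length inputs the path is forced onto the diagonal, so the penalty interpolates smoothly between the two extremes instead of switching abruptly at a window boundary.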

Alternative approaches

In functional data analysis, time series are regarded as discretizations of smooth (differentiable) functions of time. By viewing the observed samples as smooth functions, one can utilize continuous mathematics for analyzing data.[20] Smoothness and monotonicity of time warp functions may be obtained, for instance, by integrating a time-varying radial basis function, which makes the warp a one-dimensional diffeomorphism.[21] Optimal nonlinear time warping functions are computed by minimizing a measure of distance of the set of functions to their warped average. Roughness penalty terms for the warping functions may be added, e.g., by constraining the size of their curvature. The resultant warping functions are smooth, which facilitates further processing. This approach has been successfully applied to analyze patterns and variability of speech movements.[22][23]

Another related approach is hidden Markov models (HMMs): it has been shown that the Viterbi algorithm used to search for the most likely path through the HMM is equivalent to stochastic DTW.[24][25][26]

DTW and related warping methods are typically used as pre- or post-processing steps in data analyses. If the observed sequences contain random variation in both their values (the shape of the observed sequences) and their timing (random temporal misalignment), the warping may overfit to noise, leading to biased results. A simultaneous model formulation with random variation in both values (vertical) and time-parametrization (horizontal) is an example of a nonlinear mixed-effects model.[27] In human movement analysis, simultaneous nonlinear mixed-effects modeling has been shown to produce superior results compared to DTW.[28]

Open-source software

- The tempo C++ library with Python bindings implements Early Abandoned and Pruned DTW as well as Early Abandoned and Pruned ADTW and DTW lower bounds LB_Keogh, LB_Enhanced and LB_Webb.

- The UltraFastMPSearch Java library implements the UltraFastWWSearch algorithm[29] for fast warping window tuning.

- The lbimproved C++ library implements Fast Nearest-Neighbor Retrieval algorithms under the GNU General Public License (GPL). It also provides a C++ implementation of dynamic time warping, as well as various lower bounds.

- The FastDTW library is a Java implementation of DTW and a FastDTW implementation that provides optimal or near-optimal alignments with an $O(N)$ time and memory complexity, in contrast to the $O(N^2)$ requirement for the standard DTW algorithm. FastDTW uses a multilevel approach that recursively projects a solution from a coarser resolution and refines the projected solution.

- FastDTW fork (Java) published to Maven Central.

- time-series-classification (Java), a package for time series classification using DTW in Weka.

- The DTW suite provides Python (dtw-python) and R packages (dtw) with a comprehensive coverage of the DTW algorithm family members, including a variety of recursion rules (also called step patterns), constraints, and substring matching.

- The mlpy Python library implements DTW.

- The pydtw Python library implements the Manhattan and Euclidean flavoured DTW measures including the LB_Keogh lower bounds.

- The cudadtw C++/CUDA library implements subsequence alignment of Euclidean-flavoured DTW and z-normalized Euclidean distance similar to the popular UCR-Suite on CUDA-enabled accelerators.

- The JavaML machine learning library implements DTW.

- The ndtw C# library implements DTW with various options.

- Sketch-a-Char uses Greedy DTW (implemented in JavaScript) as part of a LaTeX symbol classifier program.

- The MatchBox implements DTW to match mel-frequency cepstral coefficients of audio signals.

- Sequence averaging: a GPL Java implementation of DBA.[16]

- The Gesture Recognition Toolkit (GRT), a C++ real-time gesture-recognition toolkit, implements DTW.

- The PyHubs software package implements DTW and nearest-neighbour classifiers, as well as their extensions (hubness-aware classifiers).

- The simpledtw Python library implements the classic $O(NM)$ dynamic programming algorithm and is based on NumPy. It supports values of any dimension, as well as custom norm functions for the distances. It is licensed under the MIT license.

- The tslearn Python library implements DTW in the time-series context.

- The cuTWED CUDA Python library implements an improved Time Warp Edit Distance algorithm that uses only linear memory.

- DynamicAxisWarping.jl is a Julia implementation of DTW and related algorithms such as FastDTW, SoftDTW, GeneralDTW and DTW barycenters.

- The Multi_DTW implements DTW to match two 1-D arrays or 2-D speech files (2-D array).

- The dtwParallel (Python) package incorporates the main functionalities available in current DTW libraries and novel functionalities such as parallelization, computation of similarity (kernel-based) values, and consideration of data with different types of features (categorical, real-valued, etc.).[30]

Applications

Spoken-word recognition

Due to different speaking rates, a non-linear fluctuation occurs in the speech pattern along the time axis, which needs to be eliminated.[31] DP matching is a pattern-matching algorithm based on dynamic programming (DP), which uses a time-normalization effect, where the fluctuations in the time axis are modeled using a non-linear time-warping function. Considering any two speech patterns, we can get rid of their timing differences by warping the time axis of one so that the maximal coincidence is attained with the other. However, if the warping function is allowed to take any possible value, very little distinction can be made between words belonging to different categories. So, to enhance the distinction between words belonging to different categories, restrictions were imposed on the slope of the warping function.

Correlation power analysis

Unstable clocks are used to defeat naive power analysis. Several techniques are used to counter this defense, one of which is dynamic time warping.

Finance and econometrics

Dynamic time warping is used in finance and econometrics to assess the quality of the prediction versus real-world data.[32][33][34]

See also

References

Further reading
