Transfer learning

Machine learning technique

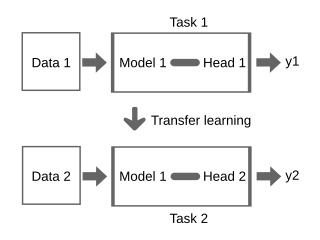

Transfer learning (TL) is a technique in machine learning (ML) in which knowledge learned from a task is re-used in order to boost performance on a related task.[1] For example, for image classification, knowledge gained while learning to recognize cars could be applied when trying to recognize trucks. This topic is related to the psychological literature on transfer of learning, although practical ties between the two fields are limited. Reusing/transferring information from previously learned tasks to new tasks has the potential to significantly improve learning efficiency.[2]

Since transfer learning makes use of training with multiple objective functions, it is related to cost-sensitive machine learning and multi-objective optimization.[3]

History

In 1976, Bozinovski and Fulgosi published a paper addressing transfer learning in neural network training.[4][5] The paper gives a mathematical and geometrical model of the topic. In 1981, a report considered the application of transfer learning to a dataset of images representing letters of computer terminals, experimentally demonstrating positive and negative transfer learning.[6]

In 1992, Lorien Pratt formulated the discriminability-based transfer (DBT) algorithm.[7]

By 1998, the field had advanced to include multi-task learning,[8] along with more formal theoretical foundations.[9] Influential publications on transfer learning include the book Learning to Learn in 1998,[10] a 2009 survey[11] and a 2019 survey.[12]

Andrew Ng said in his NIPS 2016 tutorial[13][14] that TL would become the next driver of machine learning commercial success after supervised learning.

In the 2020 paper "Rethinking Pre-training and Self-training",[15] Zoph et al. reported that pre-training can hurt accuracy, and advocated self-training instead.

Definition

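The definition of transfer learning is given in terms of domains and tasks. A domain $\mathcal{D}$ consists of a feature space $\mathcal{X}$ and a marginal probability distribution $P(X)$, where $X = \{x_1, \ldots, x_n\} \in \mathcal{X}$. Given a specific domain, $\mathcal{D} = \{\mathcal{X}, P(X)\}$, a task consists of two components: a label space $\mathcal{Y}$ and an objective predictive function $f : \mathcal{X} \rightarrow \mathcal{Y}$. The function $f$ is used to predict the corresponding label $f(x)$ of a new instance $x$. This task, denoted by $\mathcal{T} = \{\mathcal{Y}, f(x)\}$, is learned from the training data consisting of pairs $\{x_i, y_i\}$, where $x_i \in \mathcal{X}$ and $y_i \in \mathcal{Y}$.[16]

Given a source domain $\mathcal{D}_S$ and learning task $\mathcal{T}_S$, a target domain $\mathcal{D}_T$ and learning task $\mathcal{T}_T$, where $\mathcal{D}_S \neq \mathcal{D}_T$, or $\mathcal{T}_S \neq \mathcal{T}_T$, transfer learning aims to help improve the learning of the target predictive function $f_T(\cdot)$ in $\mathcal{D}_T$ using the knowledge in $\mathcal{D}_S$ and $\mathcal{T}_S$.[16]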
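As an illustration only, the definition can be made concrete with a toy one-dimensional regression problem. The sketch below is not taken from the cited sources. It assumes a linear model trained by batch gradient descent, a source predictive function 2x + 1, and a related target predictive function 2x + 1.5 observed at fewer points. The helper names `mse` and `fit` are hypothetical. Knowledge is transferred in the simplest possible way, by using the parameters learned on the source task as the starting point for the target task.

```python
def mse(params, xs, ys):
    """Mean squared error of the linear model y = w*x + b."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit(params, xs, ys, steps, lr=0.1):
    """Run `steps` iterations of batch gradient descent starting from `params`."""
    w, b = params
    n = len(xs)
    for _ in range(steps):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2 * sum(residuals) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


# Source domain and task (assumed for illustration): f_S(x) = 2x + 1.
xs_src = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys_src = [2 * x + 1 for x in xs_src]

# Target domain and task: different sample points, related function f_T(x) = 2x + 1.5.
xs_tgt = [-1.0, 0.0, 1.0]
ys_tgt = [2 * x + 1.5 for x in xs_tgt]

# Learn the source task from a zero initialization.
source_params = fit((0.0, 0.0), xs_src, ys_src, steps=200)

# Same small training budget on the target, from two different starting points.
scratch_params = fit((0.0, 0.0), xs_tgt, ys_tgt, steps=5)
transfer_params = fit(source_params, xs_tgt, ys_tgt, steps=5)

loss_scratch = mse(scratch_params, xs_tgt, ys_tgt)
loss_transfer = mse(transfer_params, xs_tgt, ys_tgt)
print(f"target loss from scratch:  {loss_scratch:.4f}")
print(f"target loss with transfer: {loss_transfer:.4f}")
```

In this toy setting the transferred start gives the lower target loss after the same five steps, because the source parameters already lie close to the target solution. If the two tasks were unrelated, the same warm start could instead slow learning down, which corresponds to negative transfer.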

Applications

Algorithms are available for transfer learning in Markov logic networks[17] and Bayesian networks.[18] Transfer learning has been applied to cancer subtype discovery,[19] building utilization,[20][21] general game playing,[22] text classification,[23][24] digit recognition,[25] medical imaging and spam filtering.[26]

In 2020, it was discovered that, due to their similar physical natures, transfer learning is possible between the classification of electromyographic (EMG) signals from the muscles and the classification of electroencephalographic (EEG) brainwaves, that is, from the gesture recognition domain to the mental state recognition domain. The relationship was found to work in both directions, showing that EEG signals can likewise be used to classify EMG.[27] The experiments showed that the accuracy of neural networks and convolutional neural networks was improved[28] through transfer learning, both prior to any learning (compared to standard random weight distribution) and at the end of the learning process (asymptote). That is, results are improved by exposure to another domain. Moreover, the end-user of a pre-trained model can change the structure of fully-connected layers to improve performance.[29]
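The idea of changing the fully-connected layers of a pre-trained model can be sketched in a few lines. The example below is a hypothetical, dependency-free illustration and does not reproduce the method of any cited work. The weights in `pretrained`, the helper names `extract` and `train_head`, and the target task are all invented for the example. The feature layer is kept frozen, the three-output source head is discarded, and a new single-output logistic head is trained on the target data.

```python
import math

# Hypothetical pre-trained model: frozen feature weights plus a source-task head.
pretrained = {
    "features": [[1.0, -1.0], [-1.0, 1.0]],          # 2x2 weight matrix, kept frozen
    "head": [[0.3, -0.2], [0.1, 0.4], [-0.5, 0.2]],  # 3-class source head, discarded below
}


def extract(x, weights):
    """Frozen feature extractor: a linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(features, labels, steps=100, lr=0.5):
    """Fit a new single-output logistic head; the extractor is never updated."""
    w = [0.0] * len(features[0])
    b = 0.0
    n = len(features)
    for _ in range(steps):
        # Prediction error of the current head on every training example.
        errors = [sigmoid(sum(wj * fj for wj, fj in zip(w, f)) + b) - y
                  for f, y in zip(features, labels)]
        # Gradient step on the head weights and bias only.
        w = [wj - lr * sum(e * f[j] for e, f in zip(errors, features)) / n
             for j, wj in enumerate(w)]
        b -= lr * sum(errors) / n
    return w, b


# Target task (binary, assumed): is the first coordinate larger than the second?
inputs = [(2.0, 0.0), (1.0, 0.0), (0.0, 1.0), (0.0, 2.0)]
labels = [1, 1, 0, 0]

# Features come from the frozen layer; only the new head is trained.
features = [extract(x, pretrained["features"]) for x in inputs]
w, b = train_head(features, labels)

# New model: reused feature layer plus the replacement head.
new_model = {"features": pretrained["features"], "head": (w, b)}

predictions = [int(sigmoid(sum(wj * fj for wj, fj in zip(w, f)) + b) > 0.5)
               for f in features]
print(predictions == labels)
```

Training only the head keeps the number of trainable parameters small, which is one reason this pattern is common when target data is scarce. In deep learning frameworks the same idea is usually expressed by freezing the early layers and swapping the final layers.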
