
Score from a test designed to assess intelligence. From Wikipedia, the free encyclopedia.

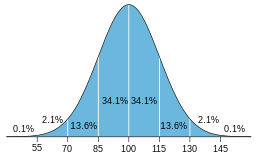

An intelligence quotient (IQ) is a total score derived from a set of standardized tests or subtests designed to assess human intelligence.[1] Originally, IQ was a score obtained by dividing a person's mental age score, obtained by administering an intelligence test, by the person's chronological age, both expressed in terms of years and months. The resulting fraction (quotient) was multiplied by 100 to obtain the IQ score.[2] For modern IQ tests, the raw score is transformed to a normal distribution with mean 100 and standard deviation 15.[3] This results in approximately two-thirds of the population scoring between IQ 85 and IQ 115 and about 2 percent each above 130 and below 70.[4][5]
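The two scoring schemes described above can be checked with a few lines of arithmetic. The following is a minimal sketch, not a scoring procedure from any actual test: the function names are hypothetical, ages are assumed to be given in months, and the modern scale is idealized as an exact normal distribution with mean 100 and standard deviation 15.

```python
from statistics import NormalDist


def ratio_iq(mental_age_months: float, chronological_age_months: float) -> float:
    """Historical ratio IQ: mental age divided by chronological age, times 100."""
    return 100 * mental_age_months / chronological_age_months


# Idealized modern scale (assumption: scores are exactly normal, mean 100, SD 15).
IQ_SCALE = NormalDist(mu=100, sigma=15)


def share_between(low: float, high: float) -> float:
    """Fraction of the idealized population scoring between low and high."""
    return IQ_SCALE.cdf(high) - IQ_SCALE.cdf(low)


def share_above(threshold: float) -> float:
    """Fraction of the idealized population scoring above threshold."""
    return 1 - IQ_SCALE.cdf(threshold)


# A child aged 6 years 0 months (72 months) with a mental age of 7 years 6 months (90 months).
print(ratio_iq(90, 72))                 # 125.0
print(round(share_between(85, 115), 3)) # 0.683, roughly two-thirds
print(round(share_above(130), 3))       # 0.023, roughly 2 percent
```

Under these assumptions the share below 70 equals the share above 130 by symmetry.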

| Intelligence quotient | |
|---|---|
| ![[picture of an example IQ test item]](http://upload.wikimedia.org/wikipedia/commons/thumb/e/ec/Raven_Matrix.svg/290px-Raven_Matrix.svg.png) One kind of IQ test item, modelled after items in the Raven's Progressive Matrices test | |
| ICD-10-PCS | Z01.8 |
| ICD-9-CM | 94.01 |

Scores from intelligence tests are estimates of intelligence. Unlike, for example, distance and mass, a concrete measure of intelligence cannot be achieved given the abstract nature of the concept of "intelligence".[6] IQ scores have been shown to be associated with such factors as nutrition,[7][8][9] parental socioeconomic status,[10][11] morbidity and mortality,[12][13] parental social status,[14] and perinatal environment.[15] While the heritability of IQ has been investigated for nearly a century, there is still debate about the significance of heritability estimates[16][17] and the mechanisms of inheritance.[18]

IQ scores are used for educational placement, assessment of intellectual ability, and evaluating job applicants. In research contexts, they have been studied as predictors of job performance[19] and income.[20] They are also used to study distributions of psychometric intelligence in populations and the correlations between it and other variables. Raw scores on IQ tests for many populations have been rising at an average rate that scales to three IQ points per decade since the early 20th century, a phenomenon called the Flynn effect. Investigation of different patterns of increases in subtest scores can also inform current research on human intelligence.

Historically, many proponents of IQ testing have been eugenicists who used pseudoscience to push now-debunked views of racial hierarchy in order to justify segregation and oppose immigration.[21][22] Such views are now rejected by a strong consensus of mainstream science, though fringe figures continue to promote them in pseudo-scholarship and popular culture.[23][24]

Historically, even before IQ tests were devised, there were attempts to classify people into intelligence categories by observing their behavior in daily life.[25][26] Those other forms of behavioral observation are still important for validating classifications based primarily on IQ test scores. Both intelligence classification by observation of behavior outside the testing room and classification by IQ testing depend on the definition of "intelligence" used in a particular case and on the reliability and error of estimation in the classification procedure.

The English statistician Francis Galton (1822–1911) made the first attempt at creating a standardized test for rating a person's intelligence. A pioneer of psychometrics and the application of statistical methods to the study of human diversity and the study of inheritance of human traits, he believed that intelligence was largely a product of heredity (by which he did not mean genes, although he did develop several pre-Mendelian theories of particulate inheritance).[27][28][29] He hypothesized that there should exist a correlation between intelligence and other observable traits such as reflexes, muscle grip, and head size.[30] He set up the first mental testing center in the world in 1882 and he published "Inquiries into Human Faculty and Its Development" in 1883, in which he set out his theories. After gathering data on a variety of physical variables, he was unable to show any such correlation, and he eventually abandoned this research.[31][32]

French psychologist Alfred Binet, together with Victor Henri and Théodore Simon, had more success in 1905, when they published the Binet–Simon intelligence test, which focused on verbal abilities. It was intended to identify "mental retardation" in school children,[33] but in specific contradistinction to claims made by psychiatrists that these children were "sick" (not "slow") and should therefore be removed from school and cared for in asylums.[34] The score on the Binet–Simon scale would reveal the child's mental age. For example, a six-year-old child who passed all the tasks usually passed by six-year-olds (but nothing beyond) would have a mental age that matched the child's chronological age, 6.0 (Fancher, 1985). Binet and Simon thought that intelligence was multifaceted, but came under the control of practical judgment.

In Binet and Simon's view, there were limitations with the scale and they stressed what they saw as the remarkable diversity of intelligence and the subsequent need to study it using qualitative, as opposed to quantitative, measures (White, 2000). American psychologist Henry H. Goddard published a translation of the scale in 1910. American psychologist Lewis Terman at Stanford University revised the Binet–Simon scale, which resulted in the Stanford revision of the Binet–Simon Intelligence Scale (1916). It became the most popular test in the United States for decades.[33][35][36][37]

The abbreviation "IQ" was coined by the psychologist William Stern, of the University of Breslau, for the German term Intelligenzquotient, his term for a scoring method for intelligence tests that he advocated in a 1912 book.[38]

The many different kinds of IQ tests include a wide variety of item content. Some test items are visual, while many are verbal. Test items vary from being based on abstract-reasoning problems to concentrating on arithmetic, vocabulary, or general knowledge.

The British psychologist Charles Spearman in 1904 made the first formal factor analysis of correlations between tests. He observed that children's school grades across seemingly unrelated school subjects were positively correlated, and reasoned that these correlations reflected the influence of an underlying general mental ability that entered into performance on all kinds of mental tests. He suggested that all mental performance could be conceptualized in terms of a single general ability factor and a large number of narrow task-specific ability factors. Spearman named it g for "general factor" and labeled the specific factors or abilities for specific tasks s.[39] In any collection of test items that make up an IQ test, the score that best measures g is the composite score that has the highest correlations with all the item scores. Typically, the "g-loaded" composite score of an IQ test battery appears to involve a common strength in abstract reasoning across the test's item content.

During World War I, the Army needed a way to evaluate and assign recruits to appropriate tasks. This led to the development of several mental tests by Robert Yerkes, who worked with major hereditarians of American psychometrics, including Terman and Goddard, to write the tests.[40] The testing generated controversy and much public debate in the United States. Nonverbal or "performance" tests were developed for those who could not speak English or were suspected of malingering.[33] Based on Goddard's translation of the Binet–Simon test, the tests had an impact in screening men for officer training:

...the tests did have a strong impact in some areas, particularly in screening men for officer training. At the start of the war, the army and national guard maintained nine thousand officers. By the end, two hundred thousand officers presided, and two-thirds of them had started their careers in training camps where the tests were applied. In some camps, no man scoring below C could be considered for officer training.[40]

In total, 1.75 million men were tested, making these the first mass-produced written tests of intelligence. The results are nonetheless considered dubious and unusable, for reasons including high variability in how the tests were administered across camps and questions that tested familiarity with American culture rather than intelligence.[40] After the war, positive publicity promoted by army psychologists helped to make psychology a respected field.[41] Subsequently, there was an increase in jobs and funding in psychology in the United States.[42] Group intelligence tests were developed and became widely used in schools and industry.[43]

The results of these tests, which at the time reaffirmed contemporary racism and nationalism, are considered controversial and dubious, having rested on certain contested assumptions: that intelligence was heritable, innate, and reducible to a single number; that the tests were administered systematically; and that test questions actually measured innate intelligence rather than reflecting environmental factors.[40] The tests also bolstered jingoist narratives in the context of increased immigration, which may have influenced the passing of the Immigration Restriction Act of 1924.[40]

L.L. Thurstone argued for a model of intelligence that included seven unrelated factors (verbal comprehension, word fluency, number facility, spatial visualization, associative memory, perceptual speed, and inductive reasoning). While not widely used, Thurstone's model influenced later theories.[33]

David Wechsler produced the first version of his test in 1939. It gradually became more popular and overtook the Stanford–Binet in the 1960s. One explanation is that psychologists and educators wanted more information than the single score from the Binet, which Wechsler's ten or more subtests provided. Another is that the Stanford–Binet test reflected mostly verbal abilities, while the Wechsler test also reflected nonverbal abilities. The Wechsler test has been revised several times, as is common for IQ tests, to incorporate new research. The Stanford–Binet has also been revised several times and is now similar to the Wechsler in several aspects, but the Wechsler continues to be the most popular test in the United States.[33]

Eugenics, a set of beliefs and practices aimed at improving the genetic quality of the human population by excluding people and groups judged to be inferior and promoting those judged to be superior,[44][45][46] played a significant role in the history and culture of the United States during the Progressive Era, from the late 19th century until US involvement in World War II.[47][48]

The American eugenics movement was rooted in the biological determinist ideas of the British scientist Sir Francis Galton. In 1883, Galton first used the word eugenics to describe the biological improvement of human genes and the concept of being "well-born".[49][50] He believed that differences in a person's ability were acquired primarily through genetics and that eugenics could be implemented through selective breeding to improve the overall quality of the human race, thereby allowing humans to direct their own evolution.[51]

Henry H. Goddard was a eugenicist. In 1908, he published his own version of the Binet–Simon test, The Binet and Simon Test of Intellectual Capacity, and cordially promoted the test. He quickly extended the use of the scale to the public schools (1913), to immigration (Ellis Island, 1914) and to a court of law (1914).[52]

Unlike Galton, who promoted eugenics through selective breeding for positive traits, Goddard sided with the US eugenics movement, which sought to eliminate "undesirable" traits.[53] Goddard used the term "feeble-minded" to refer to people who did not perform well on the test. He argued that "feeble-mindedness" was caused by heredity, and thus feeble-minded people should be prevented from giving birth, either by institutional isolation or sterilization surgeries.[52] At first, sterilization targeted the disabled, but it was later extended to poor people. Goddard's intelligence test was endorsed by eugenicists to push for forced sterilization laws. Different states adopted sterilization laws at different paces. These laws, whose constitutionality was upheld by the Supreme Court in its 1927 ruling Buck v. Bell, forced over 60,000 people to undergo sterilization in the United States.[54]

California's sterilization program was so effective that the Nazis turned to the government for advice on how to prevent the birth of the "unfit".[55] While the US eugenics movement lost much of its momentum in the 1940s in view of the horrors of Nazi Germany, advocates of eugenics (including Nazi geneticist Otmar Freiherr von Verschuer) continued to work and promote their ideas in the United States.[55] In later decades, some eugenic principles have made a resurgence as a voluntary means of selective reproduction, with some calling them "new eugenics".[56] As it becomes possible to test for and correlate genes with IQ (and its proxies),[57] ethicists and embryonic genetic testing companies are attempting to understand the ways in which the technology can be ethically deployed.[58]

Raymond Cattell (1941) proposed two types of cognitive abilities in a revision of Spearman's concept of general intelligence. Fluid intelligence (Gf) was hypothesized as the ability to solve novel problems by using reasoning, and crystallized intelligence (Gc) was hypothesized as a knowledge-based ability that was very dependent on education and experience. In addition, fluid intelligence was hypothesized to decline with age, while crystallized intelligence was largely resistant to the effects of aging. The theory was almost forgotten, but was revived by his student John L. Horn (1966) who later argued Gf and Gc were only two among several factors, and who eventually identified nine or ten broad abilities. The theory continued to be called Gf-Gc theory.[33]

John B. Carroll (1993), after a comprehensive reanalysis of earlier data, proposed the three stratum theory, which is a hierarchical model with three levels. The bottom stratum consists of narrow abilities that are highly specialized (e.g., induction, spelling ability). The second stratum consists of broad abilities. Carroll identified eight second-stratum abilities. Carroll accepted Spearman's concept of general intelligence, for the most part, as a representation of the uppermost, third stratum.[59][60]

In 1999, a merging of the Gf-Gc theory of Cattell and Horn with Carroll's Three-Stratum theory led to the Cattell–Horn–Carroll theory (CHC Theory), with g at the top of the hierarchy, ten broad abilities below it, and these further subdivided into seventy narrow abilities on the third stratum. CHC Theory has greatly influenced many of the current broad IQ tests.[33]

Modern tests do not necessarily measure all of these broad abilities. For example, quantitative knowledge and reading and writing ability may be seen as measures of school achievement and not IQ.[33] Decision speed may be difficult to measure without special equipment. g was earlier often subdivided into only Gf and Gc, which were thought to correspond to the nonverbal or performance subtests and verbal subtests in earlier versions of the popular Wechsler IQ test. More recent research has shown the situation to be more complex.[33] Modern comprehensive IQ tests do not stop at reporting a single IQ score. Although they still give an overall score, they now also give scores for many of these more restricted abilities, identifying particular strengths and weaknesses of an individual.[33]

An alternative to standard IQ tests, meant to test the proximal development of children, originated in the writings of psychologist Lev Vygotsky (1896–1934) during the last two years of his life.[61][62] According to Vygotsky, the maximum level of complexity and difficulty of problems that a child is capable of solving under some guidance indicates their level of potential development. The difference between this level of potential and the lower level of unassisted performance indicates the child's zone of proximal development.[63] The combination of the two indexes (the level of actual development and the zone of proximal development), according to Vygotsky, provides a significantly more informative indicator of psychological development than the assessment of the level of actual development alone.[64][65] His ideas on the zone of proximal development were later developed in a number of psychological and educational theories and practices, most notably under the banner of dynamic assessment, which seeks to measure developmental potential[66][67][68] (for instance, in the work of Reuven Feuerstein and his associates,[69] who has criticized standard IQ testing for its putative assumption or acceptance of "fixed and immutable" characteristics of intelligence or cognitive functioning). Dynamic assessment has been further elaborated in the work of Ann Brown and John D. Bransford and in theories of multiple intelligences authored by Howard Gardner and Robert Sternberg.[70][71]

J.P. Guilford's Structure of Intellect (1967) model of intelligence used three dimensions, which, when combined, yielded a total of 120 types of intelligence. It was popular in the 1970s and early 1980s, but faded owing to both practical problems and theoretical criticisms.[33]

Alexander Luria's earlier work on neuropsychological processes led to the PASS theory (1997). It argued that only looking at one general factor was inadequate for researchers and clinicians who worked with learning disabilities, attention disorders, intellectual disability, and interventions for such disabilities. The PASS model covers four kinds of processes (planning process, attention/arousal process, simultaneous processing, and successive processing). The planning processes involve decision making, problem solving, and performing activities and require goal setting and self-monitoring.

The attention/arousal process involves selectively attending to a particular stimulus, ignoring distractions, and maintaining vigilance. Simultaneous processing involves the integration of stimuli into a group and requires the observation of relationships. Successive processing involves the integration of stimuli into serial order. The planning and attention/arousal components come from structures located in the frontal lobe, and the simultaneous and successive processes come from structures located in the posterior region of the cortex.[72][73][74] The PASS theory has influenced some recent IQ tests and has been seen as a complement to the Cattell–Horn–Carroll theory described above.[33]

There are a variety of individually administered IQ tests in use in the English-speaking world.[75][76][77] The most commonly used individual IQ test series is the Wechsler Adult Intelligence Scale (WAIS) for adults and the Wechsler Intelligence Scale for Children (WISC) for school-age test-takers. Other commonly used individual IQ tests (some of which do not label their standard scores as "IQ" scores) include the current versions of the Stanford–Binet Intelligence Scales, Woodcock–Johnson Tests of Cognitive Abilities, the Kaufman Assessment Battery for Children, the Cognitive Assessment System, and the Differential Ability Scales.

There are various other IQ tests, including:

IQ scales are ordinally scaled.[81][82][83][84][85] The raw score of the norming sample is usually (rank order) transformed to a normal distribution with mean 100 and standard deviation 15.[3] While one standard deviation is 15 points, and two SDs are 30 points, and so on, this does not imply that mental ability is linearly related to IQ, such that IQ 50 would mean half the cognitive ability of IQ 100. In particular, IQ points are not percentage points.
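The rank-order transformation mentioned above can be illustrated with a short sketch. This is one simple way to do it, assuming mid-rank percentiles within a norming sample; real test publishers use more elaborate norming procedures, and the function name and sample data are hypothetical.

```python
from statistics import NormalDist


def normalize_to_iq(raw_scores, mean=100.0, sd=15.0):
    """Map raw scores to IQ-style standard scores using only their rank order.

    Each raw score is converted to a mid-rank percentile within the sample,
    and that percentile is mapped through the inverse normal CDF.
    """
    n = len(raw_scores)
    standard_normal = NormalDist()
    result = []
    for x in raw_scores:
        below = sum(1 for y in raw_scores if y < x)
        tied = sum(1 for y in raw_scores if y == x)  # includes x itself
        percentile = (below + 0.5 * tied) / n        # always strictly between 0 and 1
        result.append(mean + sd * standard_normal.inv_cdf(percentile))
    return result


# Hypothetical norming samples: only the ordering of raw scores matters,
# so an extreme raw score changes nothing once ranks are fixed.
print(normalize_to_iq([12, 15, 30]) == normalize_to_iq([12, 15, 3000]))  # True
```

Because the mapping depends only on ranks, equal gaps in IQ points do not correspond to equal gaps in raw performance, which is consistent with treating the scale as ordinal.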

| Pupil | KABC-II | WISC-III | WJ-III |
|---|---|---|---|
| A | 90 | 95 | 111 |
| B | 125 | 110 | 105 |
| C | 100 | 93 | 101 |
| D | 116 | 127 | 118 |
| E | 93 | 105 | 93 |
| F | 106 | 105 | 105 |
| G | 95 | 100 | 90 |
| H | 112 | 113 | 103 |
| I | 104 | 96 | 97 |
| J | 101 | 99 | 86 |
| K | 81 | 78 | 75 |
| L | 116 | 124 | 102 |

Psychometricians generally regard IQ tests as having high statistical reliability.[14][88] Reliability represents the measurement consistency of a test.[89] A reliable test produces similar scores upon repetition.[89] On aggregate, IQ tests exhibit high reliability, although test-takers may have varying scores when taking the same test on differing occasions, and may have varying scores when taking different IQ tests at the same age. Like all statistical quantities, any particular estimate of IQ has an associated standard error that measures uncertainty about the estimate. For modern tests, the confidence interval can be approximately 10 points and reported standard error of measurement can be as low as about three points.[90] Reported standard error may be an underestimate, as it does not account for all sources of error.[91]
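The relationship between reliability, standard error of measurement, and a confidence interval can be sketched as follows. The formula SEM = SD × sqrt(1 − reliability) comes from classical test theory and is an assumption here, not something stated in the text above; the reliability value of 0.96 is a hypothetical figure chosen because it yields a standard error of about three points. Centering the interval on the observed score is also a simplification.

```python
from math import sqrt
from statistics import NormalDist


def standard_error_of_measurement(sd: float, reliability: float) -> float:
    """Classical test theory estimate: SEM = SD * sqrt(1 - reliability)."""
    return sd * sqrt(1 - reliability)


def confidence_interval(observed: float, sem: float, level: float = 0.95):
    """Symmetric interval around the observed score (a simplifying assumption)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return observed - z * sem, observed + z * sem


sem = standard_error_of_measurement(sd=15, reliability=0.96)  # hypothetical reliability
low, high = confidence_interval(observed=100, sem=sem)
print(round(sem, 2), round(low, 1), round(high, 1))
```

With these illustrative inputs the 95% interval spans roughly twelve points in total, the same order of magnitude as the figure quoted above. Since the reported standard error may itself be an underestimate, an interval computed this way should be read as a lower bound on the real uncertainty.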

Outside influences such as low motivation or high anxiety can occasionally lower a person's IQ test score.[89] For individuals with very low scores, the 95% confidence interval may be greater than 40 points, potentially complicating the accuracy of diagnoses of intellectual disability.[92] By the same token, high IQ scores are also significantly less reliable than those near to the population median.[93] Reports of IQ scores much higher than 160 are considered dubious.[94]

Reliability and validity are very different concepts. While reliability reflects reproducibility, validity refers to whether the test measures what it purports to measure.[89] While IQ tests are generally considered to measure some forms of intelligence, they may fail to serve as an accurate measure of broader definitions of human intelligence inclusive of, for example, creativity and social intelligence. For this reason, psychologist Wayne Weiten argues that their construct validity must be carefully qualified, and not be overstated.[89] According to Weiten, "IQ tests are valid measures of the kind of intelligence necessary to do well in academic work. But if the purpose is to assess intelligence in a broader sense, the validity of IQ tests is questionable."[89]

Some scientists have disputed the value of IQ as a measure of intelligence altogether. In The Mismeasure of Man (1981, expanded edition 1996), evolutionary biologist Stephen Jay Gould compared IQ testing with the now-discredited practice of determining intelligence via craniometry, arguing that both are based on the fallacy of reification, "our tendency to convert abstract concepts into entities".[95] Gould's argument sparked a great deal of debate,[96][97] and the book is listed as one of Discover Magazine's "25 Greatest Science Books of All Time".[98]

Along these same lines, critics such as Keith Stanovich do not dispute the capacity of IQ test scores to predict some kinds of achievement, but argue that basing a concept of intelligence on IQ test scores alone neglects other important aspects of mental ability.[14][99] Robert Sternberg, another significant critic of IQ as the main measure of human cognitive abilities, argued that reducing the concept of intelligence to the measure of g does not fully account for the different skills and knowledge types that produce success in human society.[100]

Despite these objections, clinical psychologists generally regard IQ scores as having sufficient statistical validity for many clinical purposes.[33][101]

Differential item functioning (DIF), sometimes referred to as measurement bias, is a phenomenon when participants from different groups (e.g. gender, race, disability) with the same latent abilities give different answers to specific questions on the same IQ test.[102] DIF analysis measures such specific items on a test alongside measuring participants' latent abilities on other similar questions. A consistent different group response to a specific question among similar types of questions can indicate an effect of DIF. It does not count as differential item functioning if both groups have an equally valid chance of giving different responses to the same questions. Such bias can be a result of culture, educational level and other factors that are independent of group traits. DIF is only considered if test-takers from different groups with the same underlying latent ability level have a different chance of giving specific responses.[103] Such questions are usually removed in order to make the test equally fair for both groups. Common techniques for analyzing DIF are item response theory (IRT) based methods, Mantel-Haenszel, and logistic regression.[103]
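As an illustration of the Mantel-Haenszel approach named above, the sketch below computes the common odds ratio for a single item. Test-takers are assumed to have been grouped into strata of matched ability (for example, by total score on the other items), and each stratum is summarized as a 2×2 table. The function name, the tuple layout, and the counts are hypothetical.

```python
def mantel_haenszel_odds_ratio(strata):
    """Mantel-Haenszel common odds ratio for one item.

    strata: iterable of tuples
        (ref_correct, ref_incorrect, focal_correct, focal_incorrect),
    one tuple per matched-ability stratum.
    A value near 1 suggests no DIF; a value above 1 means the item favors
    the reference group among test-takers of matched ability.
    """
    numerator = 0.0
    denominator = 0.0
    for a, b, c, d in strata:
        n = a + b + c + d
        if n == 0:
            continue  # skip empty strata
        numerator += a * d / n
        denominator += b * c / n
    if denominator == 0:
        raise ValueError("odds ratio undefined: no informative strata")
    return numerator / denominator


# Hypothetical counts. In the first item both groups succeed at the same rate
# within each ability stratum; in the second the focal group does worse.
no_dif_item = [(40, 10, 20, 5), (30, 20, 15, 10)]
dif_item = [(40, 10, 10, 10), (30, 20, 5, 10)]

print(mantel_haenszel_odds_ratio(no_dif_item))  # 1.0
print(mantel_haenszel_odds_ratio(dif_item) > 1)  # True
```

Conditioning on ability stratum is what separates DIF from a plain difference in group averages: groups may differ in overall success rate without any DIF, as long as matched test-takers have the same odds of answering the item correctly.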

A 2005 study found that "differential validity in prediction suggests that the WAIS-R test may contain cultural influences that reduce the validity of the WAIS-R as a measure of cognitive ability for Mexican American students,"[104] indicating a weaker positive correlation relative to sampled white students. Other recent studies have questioned the culture-fairness of IQ tests when used in South Africa.[105][106] Standard intelligence tests, such as the Stanford–Binet, are often inappropriate for autistic children; the alternatives, developmental or adaptive skills measures, are relatively poor measures of intelligence in autistic children, and may have resulted in incorrect claims that a majority of autistic children are of low intelligence.[107]

Since the early 20th century, raw scores on IQ tests have increased in most parts of the world.[108][109][110] When a new version of an IQ test is normed, the standard scoring is set so that performance at the population median results in a score of IQ 100. The phenomenon of rising raw score performance means that if test-takers are scored by a constant standard scoring rule, IQ test scores have been rising at an average rate of around three IQ points per decade. This phenomenon was named the Flynn effect in the book The Bell Curve after James R. Flynn, the author who did the most to bring this phenomenon to the attention of psychologists.[111][112]
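The effect of scoring against outdated norms can be shown with simple arithmetic. This sketch assumes a constant, linear drift of three points per decade, which is only the long-run average quoted above; the function name is hypothetical, and the rate is not constant across countries or periods.

```python
POINTS_PER_DECADE = 3.0  # average rate quoted in the text; an idealization


def score_against_old_norms(score_on_current_norms: float,
                            years_since_old_norming: float,
                            points_per_decade: float = POINTS_PER_DECADE) -> float:
    """Estimate the score the same performance would earn under older norms.

    Assumes raw performance in the population rose linearly between the
    two normings, so older norms are easier to exceed.
    """
    return score_on_current_norms + points_per_decade * years_since_old_norming / 10


# A median performer today (IQ 100 on current norms), scored on norms set 20 years ago.
print(score_against_old_norms(100, 20))  # 106.0
```

This is why tests are periodically renormed: without renorming, the same level of performance would appear to earn steadily higher scores.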

Researchers have been exploring whether the Flynn effect is equally strong on performance of all kinds of IQ test items, whether the effect may have ended in some developed nations, whether there are social subgroup differences in the effect, and what the possible causes of the effect might be.[113] A 2011 textbook, IQ and Human Intelligence, by N. J. Mackintosh, noted that the Flynn effect demolishes fears that IQ would decrease. It also asked whether the effect represents a real increase in intelligence beyond IQ scores.[114] A 2011 psychology textbook, whose lead author is Harvard psychologist Daniel Schacter, noted that humans' inherited intelligence could be going down while acquired intelligence goes up.[115]

Research has suggested that the Flynn effect has slowed or reversed course in some Western countries beginning in the late 20th century. The phenomenon has been termed the negative Flynn effect.[116] A study of Norwegian military conscripts' test records found that IQ scores have been falling for generations born after the year 1975, and that the underlying cause of both initial increasing and subsequent falling trends appears to be environmental rather than genetic.[116]

Ronald S. Wilson is largely credited with the idea that IQ heritability rises with age.[117] Researchers building on this idea dubbed the phenomenon "The Wilson Effect", after the behavioral geneticist.[118] A paper by Thomas J. Bouchard Jr., examining twin and adoption studies, including twins "reared apart," finds that the heritability of IQ "reaches an asymptote at about 0.80 at 18–20 years of age and continuing at that level well into adulthood. In the aggregate, the studies also confirm that shared environmental influence decreases across age, approximating about 0.10 at 18–20 years of age and continuing at that level into adulthood."[118] IQ can change to some degree over the course of childhood.[119] In one longitudinal study, the mean IQ scores of tests at ages 17 and 18 were correlated at r = 0.86 with the mean scores of tests at ages five, six, and seven and at r = 0.96 with the mean scores of tests at ages 11, 12, and 13.[14]

The current consensus is that fluid intelligence generally declines with age after early adulthood, while crystallized intelligence remains intact.[120] However, the exact peak age of fluid intelligence or crystallized intelligence remains elusive. Cross-sectional studies usually show that fluid intelligence in particular peaks at a relatively young age (often in early adulthood), while longitudinal data mostly show that intelligence is stable until mid-adulthood or later, after which it seems to decline slowly.[121]

For decades, practitioners' handbooks and textbooks on IQ testing have reported IQ declines with age after the beginning of adulthood. However, later researchers pointed out this phenomenon is related to the Flynn effect and is in part a cohort effect rather than a true aging effect. A variety of studies of IQ and aging have been conducted since the norming of the first Wechsler Intelligence Scale drew attention to IQ differences in different age groups of adults. Both cohort effects (the birth year of the test-takers) and practice effects (test-takers taking the same form of IQ test more than once) must be controlled to gain accurate data. It is unclear whether any lifestyle intervention can preserve fluid intelligence into older ages.[120]

Environmental and genetic factors play a role in determining IQ. Their relative importance has been the subject of much research and debate.[122]

The general figure for the heritability of IQ, according to an American Psychological Association report, is 0.45 for children, and rises to around 0.75 for late adolescents and adults.[14] Heritability measures for g factor in infancy are as low as 0.2, around 0.4 in middle childhood, and as high as 0.9 in adulthood.[123][124] One proposed explanation is that people with different genes tend to reinforce the effects of those genes, for example by seeking out different environments.[14][125]

Family members have aspects of environments in common (for example, characteristics of the home). This shared family environment accounts for 0.25–0.35 of the variation in IQ in childhood. By late adolescence, it is quite low (zero in some studies). The effect for several other psychological traits is similar. These studies have not looked at the effects of extreme environments, such as in abusive families.[14][126][127][128]

Although parents treat their children differently, such differential treatment explains only a small amount of nonshared environmental influence. One suggestion is that children react differently to the same environment because of different genes. More likely influences may be the impact of peers and other experiences outside the family.[14][127]

A very large proportion of the over 17,000 human genes are thought to have an effect on the development and functionality of the brain.[129] While a number of individual genes have been reported to be associated with IQ, none have a strong effect. Deary and colleagues (2009) reported that no finding of a strong single gene effect on IQ has been replicated.[130] Recent findings of gene associations with normally varying intellectual differences in adults and children continue to show weak effects for any one gene.[131][132]

A 2017 meta-analysis conducted on approximately 78,000 subjects identified 52 genes associated with intelligence.[133] FNBP1L is reported to be the single gene most associated with both adult and child intelligence.[134]

David Rowe reported an interaction of genetic effects with socioeconomic status, such that the heritability was high in high-SES families, but much lower in low-SES families.[135] In the US, this has been replicated in infants,[136] children,[137] adolescents,[138] and adults.[139] Outside the US, studies show no link between heritability and SES.[140] Some effects may even reverse sign outside the US.[140][141]

Dickens and Flynn (2001) have argued that genes for high IQ initiate an environment-shaping feedback cycle, with genetic effects causing bright children to seek out more stimulating environments that then further increase their IQ. In Dickens' model, environment effects are modeled as decaying over time. In this model, the Flynn effect can be explained by an increase in environmental stimulation independent of it being sought out by individuals. The authors suggest that programs aiming to increase IQ would be most likely to produce long-term IQ gains if they enduringly raised children's drive to seek out cognitively demanding experiences.[142][143]

In general, educational interventions, such as those described below, have shown short-term effects on IQ, but long-term follow-up is often missing. For example, in the US, very large intervention programs such as the Head Start Program have not produced lasting gains in IQ scores. Even when students improve their scores on standardized tests, they do not always improve their cognitive abilities, such as memory, attention and speed.[144] More intensive but much smaller projects, such as the Abecedarian Project, have reported lasting effects, often on socioeconomic status variables rather than IQ.[14]

Recent studies have shown that training in using one's working memory may increase IQ. A study on young adults published in April 2008 by a team from the Universities of Michigan and Bern supports the possibility of transfer to fluid intelligence from specifically designed working memory training.[145] Further research will be needed to determine the nature, extent, and duration of the proposed transfer. Among other questions, it remains to be seen whether the results extend to kinds of fluid intelligence tests other than the matrix test used in the study, and if so, whether, after training, fluid intelligence measures retain their correlation with educational and occupational achievement or whether the value of fluid intelligence for predicting performance on other tasks changes. It is also unclear whether the effects of the training last for extended periods of time.[146]

Musical training in childhood correlates with higher than average IQ.[147][148] However, a study of 10,500 twins found no effects on IQ, suggesting that the correlation was caused by genetic confounders.[149] A meta-analysis concluded that "Music training does not reliably enhance children and young adolescents' cognitive or academic skills, and that previous positive findings were probably due to confounding variables."[150]

It is popularly thought that listening to classical music raises IQ. However, multiple attempted replications (e.g.[151]) have shown that this is at best a short-term effect (lasting no longer than 10 to 15 minutes) and is not related to an increase in IQ.[152]

Several neurophysiological factors have been correlated with intelligence in humans, including the ratio of brain weight to body weight and the size, shape, and activity level of different parts of the brain. Specific features that may affect IQ include the size and shape of the frontal lobes, the amount of blood and chemical activity in the frontal lobes, the total amount of gray matter in the brain, the overall thickness of the cortex, and the glucose metabolic rate.[153]

Health is important in understanding differences in IQ test scores and other measures of cognitive ability. Several factors can lead to significant cognitive impairment, particularly if they occur during pregnancy and childhood when the brain is growing and the blood–brain barrier is less effective. Such impairment may sometimes be permanent, or sometimes be partially or wholly compensated for by later growth.[154]

Since about 2010, researchers such as Eppig, Hassel, and MacKenzie have found a very close and consistent link between IQ scores and infectious diseases, especially in the infant and preschool populations and the mothers of these children.[155] They have postulated that fighting infectious diseases strains the child's metabolism and prevents full brain development. Hassel postulated that it is by far the most important factor in determining population IQ. However, they also found that subsequent factors such as good nutrition and regular quality schooling can offset early negative effects to some extent.

Developed nations have implemented several health policies regarding nutrients and toxins known to influence cognitive function. These include laws requiring fortification of certain food products[156] and laws establishing safe levels of pollutants (e.g. lead, mercury, and organochlorides). Improvements in nutrition, and in public policy in general, have been implicated in IQ increases.[157]

Cognitive epidemiology is a field of research that examines the associations between intelligence test scores and health. Researchers in the field argue that intelligence measured at an early age is an important predictor of later health and mortality differences.[13]

The American Psychological Association's report Intelligence: Knowns and Unknowns states that wherever it has been studied, children with high scores on tests of intelligence tend to learn more of what is taught in school than their lower-scoring peers. The correlation between IQ scores and grades is about .50. This means that the explained variance is 25%. Achieving good grades depends on many factors other than IQ, such as "persistence, interest in school, and willingness to study" (p. 81).[14]
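The step from a correlation to "explained variance" is simply squaring the coefficient. The following is a minimal sketch of that arithmetic, assuming a simple linear relationship between two variables; the function name `explained_variance` is illustrative and does not come from the report.

```python
def explained_variance(r: float) -> float:
    """Return the share of variance accounted for by a correlation r (r squared)."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("a correlation coefficient must lie in [-1, 1]")
    return r * r


# The IQ-grades correlation of about .50 quoted above.
share = explained_variance(0.50)
print(f"{share:.0%} explained, {1 - share:.0%} left to other factors")
# prints "25% explained, 75% left to other factors"
```

Because the coefficient is squared, even a moderate correlation leaves most of the variance to other factors, which is consistent with the report's point about persistence, interest in school, and willingness to study.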

It has been found that the correlation of IQ scores with school performance depends on the IQ measurement used. For undergraduate students, the Verbal IQ as measured by WAIS-R has been found to correlate significantly (0.53) with the grade point average (GPA) of the last 60 hours (credits). In contrast, Performance IQ correlation with the same GPA was only 0.22 in the same study.[158]

Some measures of educational aptitude correlate highly with IQ tests – for instance, Frey & Detterman (2004) reported a correlation of 0.82 between g (general intelligence factor) and SAT scores;[159] another study found a correlation of 0.81 between g and GCSE scores, with the explained variance ranging "from 58.6% in Mathematics and 48% in English to 18.1% in Art and Design".[160]

According to Schmidt and Hunter, "for hiring employees without previous experience in the job the most valid predictor of future performance is general mental ability."[19] The validity of IQ as a predictor of job performance is above zero for all work studied to date, but varies with the type of job and across different studies, ranging from 0.2 to 0.6.[161] The correlations were higher when the unreliability of measurement methods was controlled for.[14] While IQ is more strongly correlated with reasoning and less so with motor function,[162] IQ-test scores predict performance ratings in all occupations.[19]
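One common way to control for the unreliability of measurement is Spearman's correction for attenuation, which divides the observed correlation by the square root of the product of the two measures' reliabilities. The sketch below assumes that correction; the cited studies may have used a different procedure, and the function name and all input values are hypothetical, chosen only for illustration.

```python
import math


def disattenuate(r_observed: float, rel_x: float, rel_y: float) -> float:
    """Spearman's correction for attenuation.

    r_observed: correlation between the two observed measures
    rel_x, rel_y: reliability coefficients of each measure, in (0, 1]
    """
    if not (0 < rel_x <= 1 and 0 < rel_y <= 1):
        raise ValueError("reliabilities must lie in (0, 1]")
    return r_observed / math.sqrt(rel_x * rel_y)


# Hypothetical inputs, not taken from any cited study:
# an observed validity of 0.30, a test reliability of 0.90,
# and a performance-rating reliability of 0.60.
corrected = disattenuate(0.30, 0.90, 0.60)
print(round(corrected, 2))  # prints 0.41
```

Since reliabilities cannot exceed 1, the corrected value is never smaller in magnitude than the observed one, which is why correlations come out higher once unreliability is taken into account.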

That said, for highly qualified activities (research, management) low IQ scores are more likely to be a barrier to adequate performance, whereas for minimally-skilled activities, athletic strength (manual strength, speed, stamina, and coordination) is more likely to influence performance.[19] The prevailing view among academics is that it is largely through the quicker acquisition of job-relevant knowledge that higher IQ mediates job performance. This view has been challenged by Byington & Felps (2010), who argued that "the current applications of IQ-reflective tests allow individuals with high IQ scores to receive greater access to developmental resources, enabling them to acquire additional capabilities over time, and ultimately perform their jobs better."[163]

Newer studies find that the effects of IQ on job performance have been greatly overestimated. The current estimates of the correlation between job performance and IQ are about 0.23 correcting for unreliability and range restriction.[164][165]

In establishing a causal direction to the link between IQ and work performance, longitudinal studies by Watkins and others suggest that IQ exerts a causal influence on future academic achievement, whereas academic achievement does not substantially influence future IQ scores.[166] Treena Eileen Rohde and Lee Anne Thompson write that general cognitive ability, but not specific ability scores, predict academic achievement, with the exception that processing speed and spatial ability predict performance on the SAT math beyond the effect of general cognitive ability.[167]

However, large-scale longitudinal studies indicate an increase in IQ translates into an increase in performance at all levels of IQ: i.e. ability and job performance are monotonically linked at all IQ levels.[168][169]

It has been suggested that "in economic terms it appears that the IQ score measures something with decreasing marginal value" and it "is important to have enough of it, but having lots and lots does not buy you that much".[170][171]

The link from IQ to wealth is much less strong than that from IQ to job performance. Some studies indicate that IQ is unrelated to net worth.[172][173] The American Psychological Association's 1995 report Intelligence: Knowns and Unknowns stated that IQ scores accounted for about a quarter of the social status variance and one-sixth of the income variance. Statistical controls for parental SES eliminate about a quarter of this predictive power. Psychometric intelligence appears as only one of a great many factors that influence social outcomes.[14] Charles Murray (1998) showed a more substantial effect of IQ on income independent of family background.[174] In a meta-analysis, Strenze (2006) reviewed much of the literature and estimated the correlation between IQ and income to be about 0.23.[20]

Some studies assert that IQ only accounts for (explains) a sixth of the variation in income because many studies are based on young adults, many of whom have not yet reached their peak earning capacity, or even completed their education. On page 568 of The g Factor, Arthur Jensen says that although the correlation between IQ and income averages a moderate 0.4 (one-sixth or 16% of the variance), the relationship increases with age, and peaks at middle age when people have reached their maximum career potential. In the book A Question of Intelligence, Daniel Seligman cites an IQ–income correlation of 0.5 (25% of the variance).

A 2002 study further examined the impact of non-IQ factors on income and concluded that an individual's location, inherited wealth, race, and schooling are more important as factors in determining income than IQ.[175]

The American Psychological Association's 1995 report Intelligence: Knowns and Unknowns stated that the correlation between IQ and crime was −0.2. This association is generally regarded as small and prone to disappearing or shrinking substantially after controlling for the proper covariates, being much smaller than typical sociological correlates.[176] In a large Danish sample, the correlation between IQ scores and the number of juvenile offenses was −0.19; with social class controlled for, it dropped to −0.17. A correlation of 0.20 in magnitude means that the explained variance accounts for 4% of the total variance. The causal links between psychometric ability and social outcomes may be indirect. Children with poor scholastic performance may feel alienated. Consequently, they may be more likely to engage in delinquent behavior than children who do well.[14]

In his book The g Factor (1998), Arthur Jensen cited data which showed that, regardless of race, people with IQs between 70 and 90 have higher crime rates than people with IQs below or above this range, with the peak range being between 80 and 90.

The 2009 Handbook of Crime Correlates stated that reviews have found that around eight IQ points, or 0.5 SD, separate criminals from the general population, especially for persistent serious offenders. It has been suggested that this simply reflects that "only dumb ones get caught" but there is similarly a negative relation between IQ and self-reported offending. That children with conduct disorder have lower IQ than their peers "strongly argues" for the theory.[177]
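The equivalence between IQ points and standard deviation units follows from the scaling of modern tests. A minimal sketch, assuming the standard deviation of 15 points used by modern IQ tests; the helper names are illustrative.

```python
IQ_SD = 15  # assumed standard deviation of modern IQ scales


def points_to_sd(points: float, sd: float = IQ_SD) -> float:
    """Express a difference in IQ points as a number of standard deviations."""
    return points / sd


def sd_to_points(sd_units: float, sd: float = IQ_SD) -> float:
    """Express a difference in standard deviations as IQ points."""
    return sd_units * sd


print(round(points_to_sd(8), 2))  # prints 0.53
print(sd_to_points(0.5))          # prints 7.5
```

Under this assumption, eight points and 0.5 SD are rounded versions of the same gap: eight points is about 0.53 SD, and 0.5 SD is 7.5 points.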

A study of the relationship between US county-level IQ and US county-level crime rates found that higher average IQs were very weakly associated with lower levels of property crime, burglary, larceny rate, motor vehicle theft, violent crime, robbery, and aggravated assault. These results were "not confounded by a measure of concentrated disadvantage that captures the effects of race, poverty, and other social disadvantages of the county."[178] However, this study is limited in that it extrapolated Add Health estimates to the respondent's counties, and as the dataset was not designed to be representative on the state or county level, it may not be generalizable.[179]

It has also been shown that the effect of IQ is heavily dependent on socioeconomic status and that it cannot be easily controlled away, with many methodological considerations being at play.[180] Indeed, there is evidence that the small relationship is mediated by well-being, substance abuse, and other confounding factors that prohibit simple causal interpretation.[181] A recent meta-analysis has shown that the relationship is only observed in higher risk populations such as those in poverty without direct effect, but without any causal interpretation.[182] A nationally representative longitudinal study has shown that this relationship is entirely mediated by school performance.[183]

Multiple studies conducted in Scotland have found that higher IQs in early life are associated with lower mortality and morbidity rates later in life.[184][185]

| Accomplishment | IQ | Test/study | Year |
|---|---|---|---|
| MDs, JDs, and PhDs | 125 | WAIS-R | 1987 |
| College graduates | 112 | KAIT | 2000 |
| College graduates | 112 | K-BIT | 1992 |
| College graduates | 115 | WAIS-R | |
| 1–3 years of college | 104 | KAIT | |
| 1–3 years of college | 104 | K-BIT | |
| 1–3 years of college | 105–110 | WAIS-R | |
| Clerical and sales workers | 100–105 | | |
| High school graduates, skilled workers (e.g., electricians, cabinetmakers) | 100 | KAIT | |
| High school graduates, skilled workers (e.g., electricians, cabinetmakers) | 100 | WAIS-R | |
| High school graduates, skilled workers (e.g., electricians, cabinetmakers) | 97 | K-BIT | |
| 1–3 years of high school (completed 9–11 years of school) | 94 | KAIT | |
| 1–3 years of high school (completed 9–11 years of school) | 90 | K-BIT | |
| 1–3 years of high school (completed 9–11 years of school) | 95 | WAIS-R | |
| Semi-skilled workers (e.g. truck drivers, factory workers) | 90–95 | | |
| Elementary school graduates (completed eighth grade) | 90 | | |
| Elementary school dropouts (completed 0–7 years of school) | 80–85 | | |
| Have 50/50 chance of reaching high school | 75 | | |

| Accomplishment | IQ | Test/study | Year |
|---|---|---|---|
| Professional and technical | 112 | | |
| Managers and administrators | 104 | | |
| Clerical workers, sales workers, skilled workers, craftsmen, and foremen | 101 | | |
| Semi-skilled workers (operatives, service workers, including private household) | 92 | | |
| Unskilled workers | 87 | | |

| Accomplishment | IQ | Test/study | Year |
|---|---|---|---|
| Adults can harvest vegetables, repair furniture | 60 | | |
| Adults can do domestic work | 50 | | |

There is considerable variation within and overlap among these categories. People with high IQs are found at all levels of education and occupational categories. The biggest difference occurs for low IQs with only an occasional college graduate or professional scoring below 90.[33]

Among the most controversial issues related to the study of intelligence is the observation that IQ scores vary on average between ethnic and racial groups, though these differences have fluctuated and in many cases steadily decreased over time.[189] While there is little scholarly debate about the continued existence of some of these differences, the current scientific consensus is that they stem from environmental rather than genetic causes.[190][191][192] The existence of differences in IQ between the sexes has been debated, and largely depends on which tests are performed.[193][194]

While the concept of "race" is a social construct,[195] discussions of a purported relationship between race and intelligence, as well as claims of genetic differences in intelligence along racial lines, have appeared in both popular science and academic research since the modern concept of race was first introduced.

Genetics do not explain differences in IQ test performance between racial or ethnic groups.[23][190][191][192] Despite the tremendous amount of research done on the topic, no scientific evidence has emerged that the average IQ scores of different population groups can be attributed to genetic differences between those groups.[196][197][198] In recent decades, as understanding of human genetics has advanced, claims of inherent differences in intelligence between races have been broadly rejected by scientists on both theoretical and empirical grounds.[199][192][200][201][197]

Growing evidence indicates that environmental factors, not genetic ones, explain the racial IQ gap.[201][199][192] A 1996 task force investigation on intelligence sponsored by the American Psychological Association concluded that "because ethnic differences in intelligence reflect complex patterns, no overall generalization about them is appropriate," with environmental factors the most plausible reason for the shrinking gap.[14] A systematic analysis by William Dickens and James Flynn (2006) showed the gap between black and white Americans to have closed dramatically during the period between 1972 and 2002, suggesting that, in their words, the "constancy of the Black–White IQ gap is a myth".[202] The effects of stereotype threat have been proposed as an explanation for differences in IQ test performance between racial groups,[203][204] as have issues related to cultural difference and access to education.[205][206]

Despite the strong scientific consensus to the contrary, fringe figures continue to promote scientific racism about group-level IQ averages in pseudo-scholarship and popular culture.[23][24][21]

With the advent of the concept of g or general intelligence, many researchers have found that there are no significant sex differences in average IQ,[194][207][208] though ability in particular types of intelligence does vary.[193][208] Thus, while some test batteries show slightly greater intelligence in males, others show greater intelligence in females.[193][208] In particular, studies have shown female subjects performing better on tasks related to verbal ability,[194] and males performing better on tasks related to rotation of objects in space, often categorized as spatial ability.[209] These differences remain, as Hunt (2011) observes, "even though men and women are essentially equal in general intelligence".

Some research indicates that male advantages on some cognitive tests are minimized when controlling for socioeconomic factors.[193][207] Other research has concluded that there is slightly larger variability in male scores in certain areas compared to female scores, which results in slightly more males than females in the top and bottom of the IQ distribution.[210]

The existence of differences between male and female performance on math-related tests is contested,[211] and a meta-analysis focusing on average gender differences in math performance found nearly identical performance for boys and girls.[212] Currently, most IQ tests, including popular batteries such as the WAIS and the WISC-R, are constructed so that there are no overall score differences between females and males.[14][213][214]

In the United States, certain public policies and laws regarding military service,[215][216] education, public benefits,[217] capital punishment,[110] and employment incorporate an individual's IQ into their decisions. However, in the case of Griggs v. Duke Power Co. in 1971, for the purpose of minimizing employment practices that disparately impacted racial minorities, the U.S. Supreme Court banned the use of IQ tests in employment, except when linked to job performance via a job analysis. Internationally, certain public policies, such as improving nutrition and prohibiting neurotoxins, have as one of their goals raising, or preventing a decline in, intelligence.

A diagnosis of intellectual disability is in part based on the results of IQ testing. Borderline intellectual functioning is the categorization of individuals of below-average cognitive ability (an IQ of 71–85), although not as low as those with an intellectual disability (70 or below).

In the United Kingdom, the eleven-plus exam, which incorporated an intelligence test, has been used since 1945 to decide, at eleven years of age, which type of school a child should go to. It has been much less used since the widespread introduction of comprehensive schools.

IQ classification is the practice used by IQ test publishers for designating IQ score ranges into various categories with labels such as "superior" or "average".[187] IQ classification was preceded historically by attempts to classify human beings by general ability based on other forms of behavioral observation. Those other forms of behavioral observation are still important for validating classifications based on IQ tests.

There are social organizations, some international, which limit membership to people who have scores as high as or higher than the 98th percentile (two standard deviations above the mean) on some IQ test or equivalent. Mensa International is perhaps the best known of these. The largest 99.9th percentile (three standard deviations above the mean) society is the Triple Nine Society.
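The correspondence between these percentiles and standard deviations can be checked against the normal distribution. The sketch below assumes scores that are exactly normal with mean 100 and standard deviation 15, as described for modern IQ tests; real score distributions and tests scaled with a different standard deviation would give different cutoffs. The helper names are illustrative.

```python
from statistics import NormalDist

# Assumed scaling: mean 100, standard deviation 15.
iq = NormalDist(mu=100, sigma=15)


def percentile_of(score: float) -> float:
    """Percentage of the population scoring at or below the given IQ."""
    return 100 * iq.cdf(score)


def score_at(percentile: float) -> float:
    """IQ score at the given percentile (strictly between 0 and 100)."""
    return iq.inv_cdf(percentile / 100)


print(f"{percentile_of(130):.2f}")  # two SDs above the mean: 97.72
print(f"{percentile_of(145):.2f}")  # three SDs above the mean: 99.87
print(f"{score_at(98):.1f}")        # 98th percentile: 130.8
print(f"{score_at(99.9):.1f}")      # 99.9th percentile: 146.4
```

Under these assumptions, two and three standard deviations above the mean correspond to roughly the 97.7th and 99.87th percentiles, so the "98th percentile" and "99.9th percentile" labels are close approximations rather than exact equivalents.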
