
System on a chip (SoC) designed by Apple Inc. From Wikipedia, the free encyclopedia

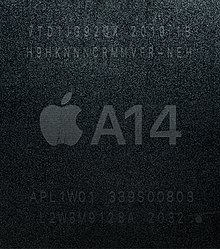

The Apple A14 Bionic is a 64-bit ARMv8.4-A[6] system on a chip (SoC) designed by Apple Inc., part of the Apple silicon series. It appears in the iPad Air (4th generation) and iPad (10th generation), as well as the iPhone 12 Mini, iPhone 12, iPhone 12 Pro, and iPhone 12 Pro Max. Apple states that the central processing unit (CPU) is up to 40% faster than the A12's, while the graphics processing unit (GPU) is up to 30% faster than the A12's. It also includes a 16-core Neural Engine and new machine learning matrix accelerators, which Apple says are up to two times and ten times as fast, respectively.[7][8]

| General information | |
|---|---|
| Launched | September 15, 2020 |
| Designed by | Apple Inc. |
| Common manufacturer | TSMC |
| Product code | APL1W01[1] |
| Max. CPU clock rate | Up to 3.0 GHz[2] |
| Cache | |
| L2 cache | 8 MB (performance cores), 4 MB (efficiency cores) |
| L4 cache | 16 MB (system cache)[3] |
| Architecture and classification | |
| Application | Mobile |
| Technology node | 5 nm (TSMC N5) |
| Microarchitecture | "Firestorm" and "Icestorm"[4][5] |
| Instruction set | ARMv8.4-A[6] |
| Physical specifications | |
| Transistors | 11.8 billion |
| Cores | 6 (2 performance + 4 efficiency) |
| GPU | Apple-designed 4-core |
| Products, models, variants | |
| Variant | Apple M1 series |
| History | |
| Predecessor | Apple A13 Bionic |
| Successors | Apple A15 Bionic (iPhone); Apple M1 (iPad Air, iPad Pro) |

The Apple A14 Bionic features an Apple-designed 64-bit, six-core CPU implementing ARMv8.4-A,[6] with two high-performance cores called Firestorm and four energy-efficient cores called Icestorm.[5]

The A14 integrates an Apple-designed four-core GPU, which Apple says delivers 30% faster graphics performance than the A12.[8] The A14 also includes dedicated neural network hardware, which Apple calls the Neural Engine; it has 16 cores and can perform 11 trillion operations per second.[8] In addition to the separate Neural Engine, the A14 CPU includes second-generation machine learning matrix multiplication accelerators (which Apple calls AMX blocks).[8][9] The A14 also includes a new image processor with improved computational photography capabilities.[10]

The A14 is manufactured by TSMC on its first-generation 5 nm fabrication process, N5, making it the first commercially available product manufactured on a 5 nm process node.[11] Its transistor count is 11.8 billion, a 38.8% increase over the A13's 8.5 billion.[12][13] According to SemiAnalysis, the A14 die measures 88 mm², giving a transistor density of 134 million transistors per mm².[14] It is mounted in a package-on-package (PoP) stack with 4 GB of LPDDR4X memory in the iPhone 12[1] and 6 GB of LPDDR4X memory in the iPhone 12 Pro.[1]
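The percentage increase and the density figure follow directly from the numbers stated in this article. A minimal Python sketch of that arithmetic, assuming only the A13 and A14 transistor counts and the 88 mm² die area reported by SemiAnalysis (the helper names are illustrative):

```python
# Figures as stated in the article: A13 and A14 transistor counts, A14 die area.
A13_TRANSISTORS = 8.5e9
A14_TRANSISTORS = 11.8e9
A14_DIE_AREA_MM2 = 88.0


def percent_increase(old: float, new: float) -> float:
    """Relative growth from old to new, in percent."""
    return (new - old) / old * 100.0


def density_mtr_per_mm2(transistors: float, area_mm2: float) -> float:
    """Transistor density in millions of transistors per square millimetre."""
    return transistors / area_mm2 / 1e6


increase = percent_increase(A13_TRANSISTORS, A14_TRANSISTORS)
density = density_mtr_per_mm2(A14_TRANSISTORS, A14_DIE_AREA_MM2)

print(f"Transistor count increase: {increase:.1f}%")        # 38.8%
print(f"Density: {density:.0f} million transistors/mm^2")   # 134
```

Dividing 11.8 billion by 88 mm² gives about 134.1 million transistors per mm², which rounds to the quoted 134.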

The A14 supports hardware video encoding for HEVC and H.264, and hardware decoding for HEVC, H.264, MPEG‑4 Part 2, and Motion JPEG.[15]

The A14 was later used as the basis for the M1 series of chips, which are used in various Macintosh and iPad models.

The table below shows the various SoCs based on the "Firestorm" and "Icestorm" microarchitectures.

| Variant | CPU cores (P+E) | GPU cores | GPU EU | Graphics ALU | Neural Engine cores | Neural Engine performance | Memory (GB) | Transistor count |
|---|---|---|---|---|---|---|---|---|
| A14 | 6 (2+4) | 4 | 64 | 512 | 16 | 11 TOPS | 4–6 | 11.8 billion |
| M1 | 8 (4+4) | 7 | 112 | 896 | 16 | 11 TOPS | 8–16 | 16 billion |
| M1 | 8 (4+4) | 8 | 128 | 1024 | 16 | 11 TOPS | 8–16 | 16 billion |
| M1 Pro | 8 (6+2) | 14 | 224 | 1792 | 16 | 11 TOPS | 16–32 | 34 billion |
| M1 Pro | 10 (8+2) | 14 | 224 | 1792 | 16 | 11 TOPS | 16–32 | 34 billion |
| M1 Pro | 10 (8+2) | 16 | 256 | 2048 | 16 | 11 TOPS | 16–32 | 34 billion |
| M1 Max | 10 (8+2) | 24 | 384 | 3072 | 16 | 11 TOPS | 32–64 | 57 billion |
| M1 Max | 10 (8+2) | 32 | 512 | 4096 | 16 | 11 TOPS | 32–64 | 57 billion |
| M1 Ultra | 20 (16+4) | 48 | 768 | 6144 | 32 | 22 TOPS | 64–128 | 114 billion |
| M1 Ultra | 20 (16+4) | 64 | 1024 | 8192 | 32 | 22 TOPS | 64–128 | 114 billion |
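In every configuration listed in the table, the GPU EU and Graphics ALU counts are exactly 16 and 128 times the GPU core count, and Neural Engine throughput scales linearly with core count (11 TOPS for 16 cores, 22 TOPS for 32). The Python sketch below recomputes those columns as a consistency check. The per-core ratios are inferred from the table's own numbers and are an assumption here, not a figure stated by Apple; the function and constant names are illustrative.

```python
# GPU configurations from the table: (variant, GPU cores, GPU EU, graphics ALU).
GPU_ROWS = [
    ("A14", 4, 64, 512),
    ("M1", 7, 112, 896),
    ("M1", 8, 128, 1024),
    ("M1 Pro", 14, 224, 1792),
    ("M1 Pro", 16, 256, 2048),
    ("M1 Max", 24, 384, 3072),
    ("M1 Max", 32, 512, 4096),
    ("M1 Ultra", 48, 768, 6144),
    ("M1 Ultra", 64, 1024, 8192),
]

# Per-core ratios inferred from the table (assumption, not an Apple specification).
EU_PER_CORE = 16
ALU_PER_CORE = 128


def mismatches(rows):
    """Return (variant, cores) for rows whose EU or ALU count != cores * ratio."""
    return [
        (name, cores)
        for name, cores, eu, alu in rows
        if eu != cores * EU_PER_CORE or alu != cores * ALU_PER_CORE
    ]


# Neural Engine: (cores, TOPS) for the 16-core and 32-core configurations.
NE_CONFIGS = [(16, 11), (32, 22)]
tops_per_core = {cores: tops / cores for cores, tops in NE_CONFIGS}

print(mismatches(GPU_ROWS))  # []
print(tops_per_core)         # {16: 0.6875, 32: 0.6875}
```

An empty mismatch list means every GPU row agrees with the 16 EU and 128 ALU per-core pattern, and the equal per-core TOPS values show that the M1 Ultra's Neural Engine figure is double the single-die figure.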
